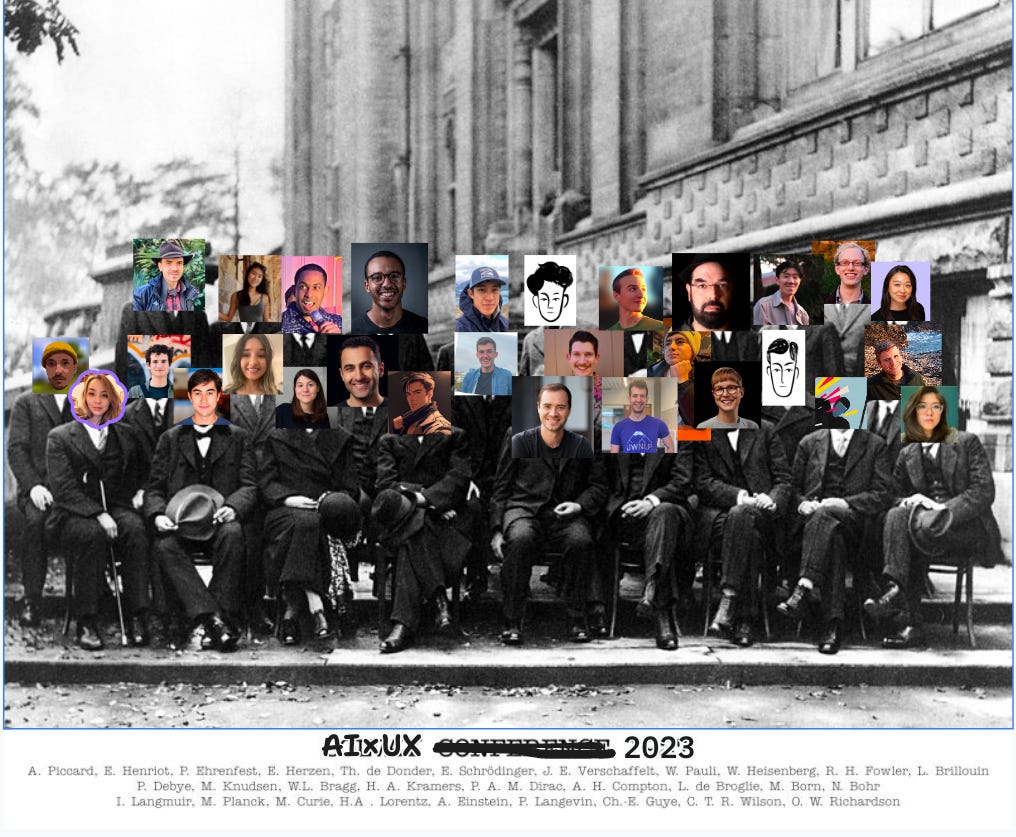

Latent Space: The AI Engineer Podcast — Practitioners talking LLMs, CodeGen, Agents, Multimodality, AI UX, GPU Infra and all things Software 3.0

Technology

Business

Alessio + swyx

The podcast by and for AI Engineers! In 2023, over 1 million visitors came to Latent Space to hear about news, papers and interviews in Software 3.0.

We cover Foundation Models changing every domain in Code Generation, Multimodality, AI Agents, GPU Infra and more, directly from the founders, builders, and thinkers involved in pushing the cutting edge. We strive to give you everything from the definitive take on the Current Thing down to the first introduction to the tech you'll be using in the next 3 months! We break news and exclusive interviews from OpenAI, tiny (George Hotz), Databricks/MosaicML (Jon Frankle), Modular (Chris Lattner), Answer.ai (Jeremy Howard), et al.

Full show notes always on https://latent.space

AGI is Being Achieved Incrementally (DevDay Recap - cleaned audio)

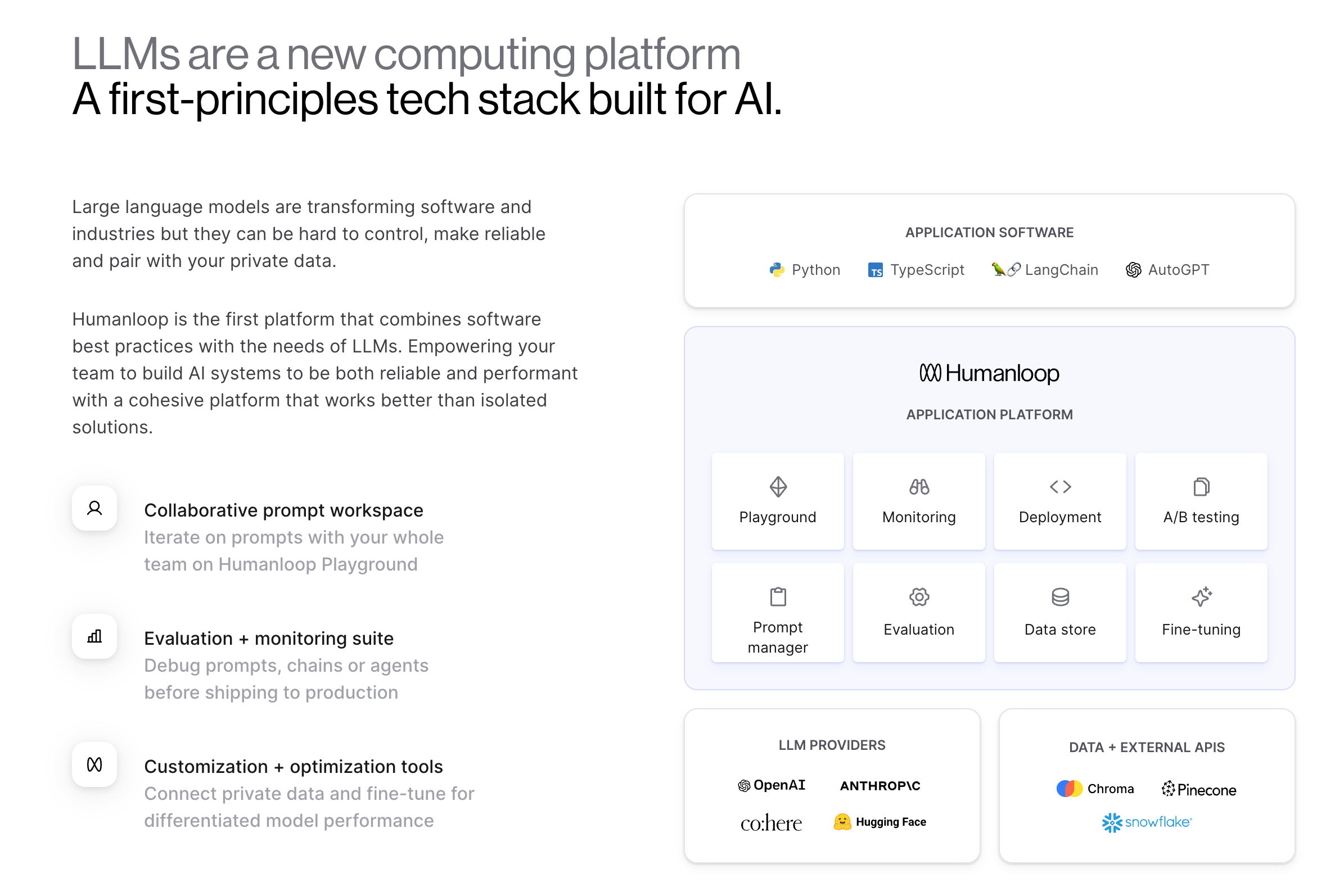

We left a lot of background audio in the DevDay podcast, which many of you loved, but we definitely understand that some of you may have had trouble with it. Listener Klaus Breyer ran it through Auphonic with speech isolation, and we figured we’d upload it as a backdated pod for people who prefer this. Of course, it means that our speakers sound out of place, since they now sound like they are talking loudly in a quiet room. Let us know in the comments what you think!

Timestamps (the cleaned part is only Part II):

* [00:55:09] Part II: Spot Interviews
* [00:55:59] Jim Fan (Nvidia) - High Level Takeaways
* [01:05:19] Raza Habib (Humanloop) - Foundation Model Ops
* [01:13:32] Surya Dantuluri (Stealth) - RIP Plugins
* [01:20:53] Reid Robinson (Zapier) - AI Actions for GPTs
* [01:30:45] Div Garg (MultiOn) - GPT4V for Agents
* [01:36:42] Louis Knight-Webb (Bloop.ai) - AI Code Search
* [01:48:36] Shreya Rajpal (Guardrails) - Guardrails for LLMs
* [01:59:00] Alex Volkov (Weights & Biases, ThursdAI) - "Keeping AI Open"
* [02:09:39] Rahul Sonwalkar (Julius AI) - Advice for Founders

Get full access to Latent Space at www.latent.space/subscribe

02:21:40

08/11/2023

AGI is Being Achieved Incrementally (OpenAI DevDay w/ Simon Willison, Alex Volkov, Jim Fan, Raza Habib, Shreya Rajpal, Rahul Ligma, et al)