Rob, Luisa, Keiran, and the 80,000 Hours team

Unusually in-depth conversations about the world's most pressing problems and what you can do to solve them.

Subscribe by searching for '80000 Hours' wherever you get podcasts.

Produced by Keiran Harris. Hosted by Rob Wiblin and Luisa Rodriguez.


#75 – Michelle Hutchinson on what people most often ask 80,000 Hours [re-release]

Rebroadcast: this episode was originally released in April 2020.

Since it was founded, 80,000 Hours has done one-on-one calls to supplement our online content and offer more personalised advice. We try to help people get clear on their most plausible paths and the key uncertainties they face in choosing between them, and we provide resources, pointers, and introductions to help them in those paths.

I (Michelle Hutchinson) joined the team a couple of years ago after working at Oxford's Global Priorities Institute, and these days I'm 80,000 Hours' Head of Advising. Since then, chatting to hundreds of people about their career plans has given me some idea of the kinds of things it’s useful for people to hear about when thinking through their careers. So we thought it would be useful to discuss some on the show for everyone to hear.

• Links to learn more, summary and full transcript.

• See over 500 vacancies on our job board.

• Apply for one-on-one career advising.

Among other common topics, we cover:

• Why traditional careers advice involves thinking through what types of roles you enjoy followed by which of those are impactful, while we recommend going the other way: ranking roles on impact, and then going down the list to find the one you think you’d most flourish in.

• That if you’re pitching your job search at the right level of role, you’ll need to apply to a large number of different jobs. So it's wise to broaden your options by applying for both stretch and backup roles, and not over-emphasising a small number of organisations.

• Our suggested process for writing a longer term career plan: 1. shortlist your best medium to long-term career options, then 2. figure out the key uncertainties in choosing between them, and 3. map out concrete next steps to resolve those uncertainties.

• Why many listeners aren't spending enough time finding out about what the day-to-day work is like in paths they're considering, or reaching out to people for advice or opportunities.

• The difficulty of maintaining the ambition to increase your social impact, while also being proud of and motivated by what you're already accomplishing.

I also thought it might be useful to give people a sense of what I do and don’t do in advising calls, to help them figure out if they should sign up for it.

If you’re wondering whether you’ll benefit from advising, bear in mind that it tends to be more useful to people:

1. With similar views to 80,000 Hours on what the world’s most pressing problems are, because we’ve done the most research on the problems we think it’s most important to address.

2. Who don’t yet have close connections with people working at effective altruist organisations.

3. Who aren’t strongly locationally constrained.

If you’re unsure, it doesn’t take long to apply, and a lot of people say they find the application form itself helps them reflect on their plans. We’re particularly keen to hear from people from under-represented backgrounds.

Also in this episode:

• I describe mistakes I’ve made in advising, and career changes made by people I’ve spoken with.

• Rob and I argue about what risks to take with your career, like when it’s sensible to take a study break, or start from the bottom in a new career path.

• I try to forecast how I’ll change after I have a baby, Rob speculates wildly on what motherhood is like, and Arden and I mercilessly mock Rob.

Get this episode by subscribing: type 80,000 Hours into your podcasting app. Or read the linked transcript.

Producer: Keiran Harris.

Audio mastering: Ben Cordell.

Transcriptions: Zakee Ulhaq.

02:14:50 · 30/12/2020

#89 – Owen Cotton-Barratt on epistemic systems and layers of defense against potential global catastrophes

From one point of view, academia forms one big 'epistemic' system: a process which directs attention, generates ideas, and judges which are good. Traditional print media is another such system, and we can think of society as a whole as a huge epistemic system, made up of these and many other subsystems. How these systems absorb, process, combine and organise information will have a big impact on what humanity as a whole ends up doing with itself; in fact, at a broad level it basically entirely determines the direction of the future.

With that in mind, today’s guest Owen Cotton-Barratt founded the Research Scholars Programme (RSP) at the Future of Humanity Institute at Oxford University, which gives early-stage researchers leeway to try to understand how the world works.

Links to learn more, summary and full transcript.

Instead of you having to pay for a master's degree, the RSP pays *you* to spend significant amounts of time thinking about high-level questions, like “What is important to do?” and “How can I usefully contribute?” Participants get to practice their research skills, while also thinking about research as a process and how research communities can function as epistemic systems that plug into the rest of society as productively as possible.

The programme attracts people with several years of experience who are looking to take their existing knowledge (whether that’s in physics, medicine, policy work, or something else) and apply it to what they determine to be the most important topics. It also attracts people without much experience but with a lot of ideas. If you went directly into a PhD programme, you might have to narrow your focus quickly. But the RSP gives you time to explore the possibilities, and to figure out the answer to the question “What’s the topic that really matters, and that I’d be happy to spend several years of my life on?”

Owen thinks one of the most useful things about the two-year programme is being around other people (other RSP participants, as well as other researchers at the Future of Humanity Institute) who are trying to think seriously about where our civilisation is headed and how to have a positive impact on this trajectory. Instead of being isolated in a PhD, you’re surrounded by folks with similar goals who can push back on your ideas and point out where you’re making mistakes. Avoiding years spent on an unproductive path could mean that you ultimately have a much bigger impact with your career.

RSP applications are set to open in the spring of 2021, but Owen thinks it’s helpful for people to think about it in advance.

In today’s episode, Arden and Owen mostly talk about Owen’s own research. They cover:

• Extinction risk classification and reduction strategies
• Preventing small disasters from becoming large disasters
• How likely we are to go from being in a collapsed state to going extinct
• What most people should do if longtermism is true
• Advice for mathematically-minded people
• And much more

Chapters:

• Rob’s intro (00:00:00)
• The interview begins (00:02:22)
• Extinction risk classification and reduction strategies (00:06:02)
• Defense layers (00:16:37)
• Preventing small disasters from becoming large disasters (00:23:31)
• Risk factors (00:38:57)
• How likely are we to go from being in a collapsed state to going extinct? (00:48:02)
• Estimating total levels of existential risk (00:54:35)
• Everyday longtermism (01:01:35)
• What should most people do if longtermism is true? (01:12:18)
• 80,000 Hours’ issue with promoting career paths (01:24:12)
• The existential risk of making a lot of really bad decisions (01:29:27)
• What should longtermists do differently today (01:39:08)
• Biggest concerns with this framework (01:51:28)
• Research careers (02:04:04)
• Being a mathematician (02:13:33)
• Advice for mathematically minded people (02:24:30)
• Rob’s outro (02:37:32)

Producer: Keiran Harris.

Audio mastering: Ben Cordell.

Transcript: Zakee Ulhaq.

02:38:12 · 17/12/2020

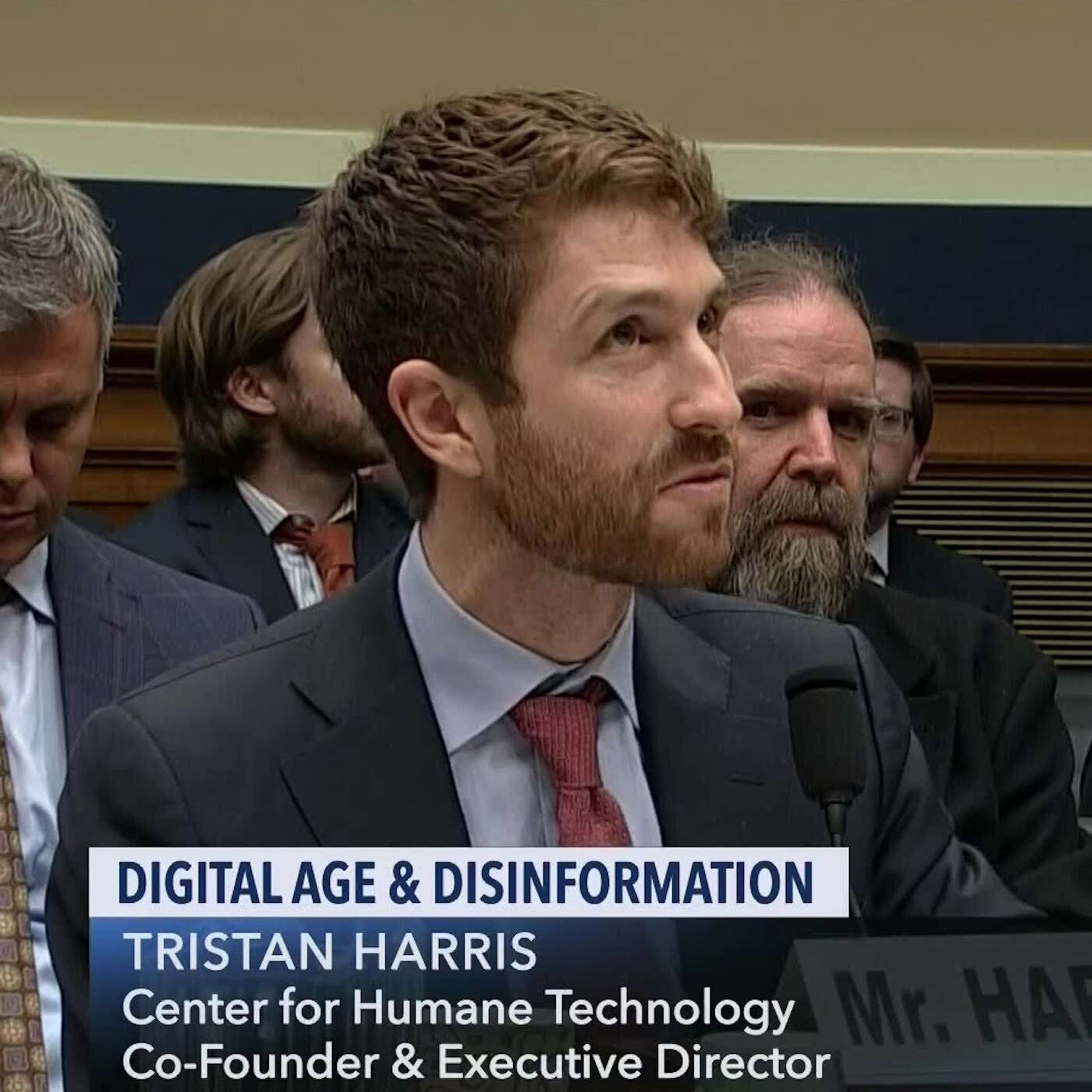

#88 – Tristan Harris on the need to change the incentives of social media companies

In its first 28 days on Netflix, the documentary The Social Dilemma, about the possible harms being caused by social media and other technology products, was seen by 38 million households in about 190 countries and in 30 languages.

Over the last ten years, the idea that Facebook, Twitter, and YouTube are degrading political discourse and grabbing and monetizing our attention in an alarming way has gone mainstream to such an extent that it's hard to remember how recently it was a fringe view. It feels intuitively true that our attention spans are shortening, we’re spending more time alone, we’re less productive, there’s more polarization and radicalization, and that we have less trust in our fellow citizens, due to having less of a shared basis of reality.

But while it all feels plausible, how strong is the evidence that it's true? In the past, people have worried about every new technological development, often in ways that seem foolish in retrospect. Socrates famously feared that being able to write things down would ruin our memory.

At the same time, historians think that the printing press probably generated religious wars across Europe, and that the radio helped Hitler and Stalin maintain power by giving them and them alone the ability to spread propaganda across the whole of Germany and the USSR. Fears about new technologies aren't always misguided.

Tristan Harris, leader of the Center for Humane Technology and co-host of the Your Undivided Attention podcast, is arguably the most prominent person working on reducing the harms of social media, and he was happy to engage with Rob’s good-faith critiques.

• Links to learn more, summary and full transcript.

• FYI, the 2020 Effective Altruism Survey is closing soon: https://www.surveymonkey.co.uk/r/EAS80K2

Tristan and Rob provide a thorough exploration of the merits of possible concrete solutions, something The Social Dilemma didn’t really address. Given that these companies are mostly trying to design their products in the way that makes them the most money, how can we get that incentive to align with what's in our interests as users and citizens?

One way is to encourage a shift to a subscription model. One claim in The Social Dilemma is that the machine learning algorithms on these sites try to shift what you believe and what you enjoy in order to make it easier to predict what content recommendations will keep you on the site. But if you paid a yearly fee to Facebook in lieu of seeing ads, their incentive would shift towards making you as satisfied as possible with their service, even if that meant using it for five minutes a day rather than 50.

Despite all the negatives, Tristan doesn’t want us to abandon the technologies he's concerned about. He asks us to imagine a social media environment designed to regularly bring our attention back to what each of us can do to improve our lives and the world. Just as we can focus on the positives of nuclear power while remaining vigilant about the threat of nuclear weapons, we could embrace social media and recommendation algorithms as the largest mass-coordination engine we've ever had: tools that could educate and organise people better than anything that has come before. The tricky and open question is how to get there.

Rob and Tristan also discuss:

• Justified concerns vs. moral panics
• The effect of social media on politics in the US and developing countries
• Tips for individuals

Chapters:

• Rob’s intro (00:00:00)
• The interview begins (00:01:36)
• Center for Humane Technology (00:04:53)
• Critics (00:08:19)
• The Social Dilemma (00:13:20)
• Three categories of harm (00:20:31)
• Justified concerns vs. moral panics (00:30:23)
• The messy real world vs. an imagined idealised world (00:38:20)
• The persuasion apocalypse (00:47:46)
• Revolt of the Public (00:56:48)
• Global effects (01:02:44)
• US politics (01:13:32)
• Potential solutions (01:20:59)
• Unintended consequences (01:42:57)
• Win-win changes (01:50:47)
• Big wins over the last 5 or 10 years (01:59:10)
• The subscription model (02:02:28)
• Tips for individuals (02:14:05)
• The current state of the research (02:22:37)
• Careers (02:26:36)

Producer: Keiran Harris.

Audio mastering: Ben Cordell.

Transcriptions: Sofia Davis-Fogel.

02:35:39 · 03/12/2020

Benjamin Todd on what the effective altruism community most needs (80k team chat #4)

In the last '80k team chat' with Ben Todd and Arden Koehler, we discussed what effective altruism is and isn't, and how to argue for it. In this episode we turn now to what the effective altruism community most needs.

• Links to learn more, summary and full transcript

• The 2020 Effective Altruism Survey just opened. If you're involved with the effective altruism community, or sympathetic to its ideas, it would be wonderful if you could fill it out: https://www.surveymonkey.co.uk/r/EAS80K2

According to Ben, we can think of the effective altruism movement as having gone through several stages, categorised by what kind of resource has been most able to unlock more progress on important issues (i.e. by what the 'bottleneck' is). Plausibly, these stages are common for other social movements as well.

• Needing money: In the first stage, when effective altruism was just getting going, more money (to do things like pay staff and put on events) was the main bottleneck to making progress.

• Needing talent: In the second stage, we especially needed more talented people willing to work on whatever seemed most pressing.

• Needing specific skills and capacity: In the third stage, which Ben thinks we're in now, the main bottlenecks are organizational capacity, infrastructure, and management to help train people up, as well as specialist skills that people can put to work now.

What's next? Perhaps needing coordination: the ability to make sure people keep working efficiently and effectively together as the community grows.

Ben and I also cover the career implications of those stages, as well as the ability to save money and the possibility that someone else would do your job in your absence.

If you’d like to learn more about these topics, you should check out a couple of articles on our site:

• Think twice before talking about ‘talent gaps’ – clarifying nine misconceptions

• How replaceable are the top candidates in large hiring rounds? Why the answer flips depending on the distribution of applicant ability

Get this episode by subscribing: type 80,000 Hours into your podcasting app. Or read the linked transcript.

Producer: Keiran Harris.

Audio mastering: Ben Cordell.

Transcriptions: Zakee Ulhaq.

01:25:21 · 12/11/2020

#87 – Russ Roberts on whether it's more effective to help strangers, or people you know

If you want to make the world a better place, would it be better to help your niece with her SATs, or try to join the State Department to lower the risk that the US and China go to war?

People involved in 80,000 Hours or the effective altruism community would be comfortable recommending the latter. This week's guest, Russ Roberts, host of the long-running podcast EconTalk and author of a forthcoming book on decision-making under uncertainty and the limited ability of data to help, worries that might be a mistake.

Links to learn more, summary and full transcript.

I've been a big fan of Russ' show EconTalk for 12 years (in fact I have a list of my top 100 recommended episodes), so I invited him to talk about his concerns with how the effective altruism community tries to improve the world. These include:

• Being too focused on the measurable
• Being too confident we've figured out 'the best thing'
• Being too credulous about the results of social science or medical experiments
• Undermining people's altruism by encouraging them to focus on strangers, whom it's naturally harder to care for
• Thinking it's possible to predictably help strangers, who you don't understand well enough to know what will truly help
• Adding levels of wellbeing across people when this is inappropriate
• Encouraging people to pursue careers they won't enjoy

These worries are partly informed by Russ' 'classical liberal' worldview, which involves a preference for free market solutions to problems, and nervousness about the big plans that sometimes come out of consequentialist thinking.

While we do disagree on a range of things, such as whether it's possible to add up wellbeing across different people, and whether it's more effective to help strangers than people you know, I make the case that some of these worries are founded on common misunderstandings about effective altruism, or at least misunderstandings of what we believe here at 80,000 Hours.
We primarily care about making the world a better place over thousands or even millions of years, and we wouldn’t dream of claiming that we could accurately measure the effects of our actions on that timescale.

I'm more skeptical of medicine and empirical social science than most people, though not quite as skeptical as Russ (check out this quiz I made where you can guess which academic findings will replicate, and which won't).

And while I do think that people should occasionally take jobs they dislike in order to have a social impact, those situations seem pretty few and far between.

But Russ and I disagree about how much we really disagree. In addition to all the above we also discuss:

• How to decide whether to have kids
• Was the case for deworming children oversold?
• Whether it would be better for countries around the world to be better coordinated

Chapters:

• Rob’s intro (00:00:00)
• The interview begins (00:01:48)
• RCTs and donations (00:05:15)
• The 80,000 Hours project (00:12:35)
• Expanding the moral circle (00:28:37)
• Global coordination (00:39:48)
• How to act if you're pessimistic about improving the long-term future (00:55:49)
• Communicating uncertainty (01:03:31)
• How much to trust empirical research (01:09:19)
• How to decide whether to have kids (01:24:13)
• Utilitarianism (01:34:01)

Producer: Keiran Harris.

Audio mastering: Ben Cordell.

Transcriptions: Zakee Ulhaq.

01:49:36 · 03/11/2020

#86 – Hilary Greaves on Pascal's mugging, strong longtermism, and whether existing can be good for us

Had World War 1 never happened, you might never have existed. It’s very unlikely that the exact chain of events that led to your conception would have happened otherwise, so perhaps you wouldn't have been born.

Would that mean that it's better for you that World War 1 happened (regardless of whether it was better for the world overall)? On the one hand, if you're living a pretty good life, you might think the answer is yes: you get to live rather than not. On the other hand, it sounds strange to say that it's better for you to be alive, because if you'd never existed there'd be no you to be worse off. But if you wouldn't be worse off if you hadn't existed, can you be better off because you do?

In this episode, philosophy professor Hilary Greaves, Director of Oxford University’s Global Priorities Institute, helps untangle this puzzle for us and walks me and Rob through the space of possible answers. She argues that philosophers have been too quick to conclude what she calls existence non-comparativism, i.e. that it can't be better for someone to exist vs. not.

Links to learn more, summary and full transcript.

Where we come down on this issue matters. If people are not made better off by existing and having good lives, you might conclude that bringing more people into existence isn't better for them, and thus, perhaps, that it's not better at all.

This would imply that bringing about a world in which more people live happy lives might not actually be a good thing (if the people wouldn't otherwise have existed), which would affect how we try to make the world a better place. Those wanting to have children in order to give them the pleasure of a good life would in some sense be mistaken. And if humanity stopped bothering to have kids and just gradually died out, we would have no particular reason to be concerned.
Furthermore, it might mean we should deprioritise issues that primarily affect future generations, like climate change or the risk of humanity accidentally wiping itself out.

This is our second episode with Professor Greaves. The first one was a big hit, so we thought we'd come back and dive into even more complex ethical issues. We discuss:

• The case for different types of ‘strong longtermism’: the idea that we ought morally to try to make the very long run future go as well as possible
• What it means for us to be 'clueless' about the consequences of our actions
• Moral uncertainty: what we should do when we don't know which moral theory is correct
• Whether we should take a bet on a really small probability of a really great outcome
• The field of global priorities research at the Global Priorities Institute and beyond

Chapters:

• The interview begins (00:02:53)
• The Case for Strong Longtermism (00:05:49)
• Compatible moral views (00:20:03)
• Defining cluelessness (00:39:26)
• Why cluelessness isn’t an objection to longtermism (00:51:05)
• Theories of what to do under moral uncertainty (01:07:42)
• Pascal’s mugging (01:16:37)
• Comparing Existence and Non-Existence (01:30:58)
• Philosophers who reject existence comparativism (01:48:56)
• Lives framework (02:01:52)
• Global priorities research (02:09:25)

Get this episode by subscribing: type 80,000 Hours into your podcasting app. Or read the linked transcript.

Producer: Keiran Harris.

Audio mastering: Ben Cordell.

Transcriptions: Zakee Ulhaq.

02:24:54 · 21/10/2020

Benjamin Todd on the core of effective altruism and how to argue for it (80k team chat #3)

Today’s episode is the latest conversation between Arden Koehler and our CEO, Ben Todd.

Ben’s been thinking a lot about effective altruism recently, including what it really is, how it's framed, and how people misunderstand it.

We recently released an article on misconceptions about effective altruism – based on Will MacAskill’s recent paper The Definition of Effective Altruism – and this episode can act as a companion piece.

Links to learn more, summary and full transcript.

Arden and Ben cover a bunch of topics related to effective altruism:

• How it isn’t just about donating money to fight poverty

• Whether it includes a moral obligation to give

• The rigorous argument for its importance

• Objections to that argument

• How to talk about effective altruism for people who aren't already familiar with it

Given that we’re in the same office, it’s relatively easy to record conversations between two 80k team members — so if you enjoy these types of bonus episodes, let us know at [email protected], and we might make them a more regular feature.

Get this episode by subscribing: type 80,000 Hours into your podcasting app. Or read the linked transcript.

Producer: Keiran Harris.

Audio mastering: Ben Cordell.

Transcriptions: Zakee Ulhaq.

01:24:07 · 22/09/2020

Ideas for high impact careers beyond our priority paths (Article)

Today’s release is the latest in our series of audio versions of our articles.

In this one, we go through some more career options beyond our priority paths that seem promising to us for positively influencing the long-term future.

Some of these are likely to be written up as priority paths in the future, or wrapped into existing ones, but we haven’t written full profiles for them yet—for example policy careers outside AI and biosecurity policy that seem promising from a longtermist perspective.

Others, like information security, we think might be as promising for many people as our priority paths, but because we haven’t investigated them much we’re still unsure.

Still others seem like they’ll typically be less impactful than our priority paths for people who can succeed equally in either, but still seem high-impact to us and like they could be top options for a substantial number of people, depending on personal fit—for example research management.

Finally some—like becoming a public intellectual—clearly have the potential for a lot of impact, but we can’t recommend them widely because they don’t have the capacity to absorb a large number of people, are particularly risky, or both.

If you want to check out the links in today’s article, you can find those here.

Our annual user survey is also now open for submissions.

Once a year for two weeks we ask all of you, our podcast listeners, article readers, advice receivers, and so on, to let us know how we've helped or hurt you.

80,000 Hours now offers many different services, and your feedback helps us figure out which programs to keep, which to cut, and which to expand.

This year we have a new section covering the podcast, asking what kinds of episodes you liked the most and want to see more of, what extra resources you use, and some other questions too.

We're always especially interested to hear ways that our work has influenced what you plan to do with your life or career, whether that impact was positive, neutral, or negative.

That might be a different focus in your existing job, or a decision to study something different or look for a new job. Alternatively, maybe you're now planning to volunteer somewhere, or donate more, or donate to a different organisation.

Your responses to the survey will be carefully read as part of our upcoming annual review, and we'll use them to help decide what 80,000 Hours should do differently next year.

So please do take a moment to fill out the user survey before it closes on Sunday (13th of September).

You can find it at 80000hours.org/survey

Get this episode by subscribing: type 80,000 Hours into your podcasting app. Or read the linked transcript.

Producer: Keiran Harris.

Audio mastering: Ben Cordell.

Transcriptions: Zakee Ulhaq.

27:54 · 07/09/2020

Benjamin Todd on varieties of longtermism and things 80,000 Hours might be getting wrong (80k team chat #2)

Today’s bonus episode is a conversation between Arden Koehler and our CEO, Ben Todd.

Ben’s been doing a bunch of research recently, and we thought it’d be interesting to hear about how he’s currently thinking about a couple of different topics – including different types of longtermism, and things 80,000 Hours might be getting wrong.

Links to learn more, summary and full transcript.

This is very off-the-cuff compared to our regular episodes, and just 54 minutes long.

In the first half, Arden and Ben talk about varieties of longtermism:

• Patient longtermism

• Broad urgent longtermism

• Targeted urgent longtermism focused on existential risks

• Targeted urgent longtermism focused on other trajectory changes

• And their distinctive implications for people trying to do good with their careers.

In the second half, they move on to:

• How to trade off transferable versus specialist career capital

• How much weight to put on personal fit

• Whether we might be highlighting the wrong problems and career paths.

Given that we’re in the same office, it’s relatively easy to record conversations between two 80k team members — so if you enjoy these types of bonus episodes, let us know at [email protected], and we might make them a more regular feature.

Our annual user survey is also now open for submissions.

Once a year for two weeks we ask all of you, our podcast listeners, article readers, advice receivers, and so on, to let us know how we've helped or hurt you.

80,000 Hours now offers many different services, and your feedback helps us figure out which programs to keep, which to cut, and which to expand.

This year we have a new section covering the podcast, asking what kinds of episodes you liked the most and want to see more of, what extra resources you use, and some other questions too.

We're always especially interested to hear ways that our work has influenced what you plan to do with your life or career, whether that impact was positive, neutral, or negative.

That might be a different focus in your existing job, or a decision to study something different or look for a new job. Alternatively, maybe you're now planning to volunteer somewhere, or donate more, or donate to a different organisation.

Your responses to the survey will be carefully read as part of our upcoming annual review, and we'll use them to help decide what 80,000 Hours should do differently next year.

So please do take a moment to fill out the user survey.

You can find it at 80000hours.org/survey

Get this episode by subscribing: type 80,000 Hours into your podcasting app. Or read the linked transcript.

Producer: Keiran Harris.

Audio mastering: Ben Cordell.

Transcriptions: Zakee Ulhaq.

57:51 · 01/09/2020

Global issues beyond 80,000 Hours’ current priorities (Article)

Today’s release is the latest in our series of audio versions of our articles.

In this one, we go through 30 global issues beyond the ones we usually prioritize most highly in our work, and that you might consider focusing your career on tackling.

Although we spend the majority of our time at 80,000 Hours on our highest priority problem areas, and we recommend working on them to many of our readers, these are just the most promising issues among those we’ve spent time investigating. There are many other global issues that we haven’t properly investigated, and which might be very promising for more people to work on.

In fact, we think working on some of the issues in this article could be as high-impact for some people as working on our priority problem areas — though we haven’t looked into them enough to be confident.

If you want to check out the links in today’s article, you can find those here.

Our annual user survey is also now open for submissions.

Once a year for two weeks we ask all of you, our podcast listeners, article readers, advice receivers, and so on, to let us know how we've helped or hurt you.

80,000 Hours now offers many different services, and your feedback helps us figure out which programs to keep, which to cut, and which to expand.

This year we have a new section covering the podcast, asking what kinds of episodes you liked the most and want to see more of, what extra resources you use, and some other questions too.

We're always especially interested to hear ways that our work has influenced what you plan to do with your life or career, whether that impact was positive, neutral, or negative.

That might be a different focus in your existing job, or a decision to study something different or look for a new job. Alternatively, maybe you're now planning to volunteer somewhere, or donate more, or donate to a different organisation.

Your responses to the survey will be carefully read as part of our upcoming annual review, and we'll use them to help decide what 80,000 Hours should do differently next year.

So please do take a moment to fill out the user survey.

You can find it at 80000hours.org/survey

Get this episode by subscribing: type 80,000 Hours into your podcasting app. Or read the linked transcript.

Producer: Keiran Harris.

Audio mastering: Ben Cordell.

Transcriptions: Zakee Ulhaq.

32:54 · 28/08/2020

#85 – Mark Lynas on climate change, societal collapse & nuclear energy

A golf-ball sized lump of uranium can deliver more than enough power to cover all of your lifetime energy use. To get the same energy from coal, you’d need 3,200 tonnes of black rock — a mass equivalent to 800 adult elephants, which would produce more than 11,000 tonnes of CO2. That’s about 11,000 tonnes more than the uranium.

Many people aren’t comfortable with the danger posed by nuclear power. But given the climatic stakes, it’s worth asking: Just how much more dangerous is it compared to fossil fuels?

According to today’s guest, Mark Lynas, author of Six Degrees: Our Future on a Hotter Planet (winner of the prestigious Royal Society Prize for Science Books) and Nuclear 2.0, it’s actually much, much safer.

Links to learn more, summary and full transcript.

Climatologists James Hansen and Pushker Kharecha calculated that the use of nuclear power between 1971 and 2009 prevented the premature deaths of 1.84 million people by avoiding the air pollution from burning coal.

What about radiation or nuclear disasters? According to Our World in Data, in generating a given amount of electricity, nuclear, wind, and solar all cause about the same number of deaths, and it's a tiny number.

So what’s going on? Why isn’t everyone demanding a massive scale-up of nuclear energy to save lives and stop climate change? Mark and many other activists believe that unchecked climate change will result in the collapse of human civilization, so the stakes could not be higher.

Mark says that many environmentalists — including him — simply grew up with anti-nuclear attitudes all around them (possibly stemming from a conflation of nuclear weapons and nuclear energy) and haven't thought to question them.

But he thinks that once you believe in the climate emergency, you have to rethink your opposition to nuclear energy.

At 80,000 Hours we haven’t analysed the merits and flaws of the case for nuclear energy — especially compared to wind and solar paired with gas, hydro, or battery power to handle intermittency — but Mark is convinced.

He says it comes down to physics: Nuclear power is just so much denser.

We need to find an energy source that provides carbon-free power to ~10 billion people, and we need to do it while humanity is doubling or tripling (or more) its energy demand.

How do you do that without destroying the world's ecology? Mark thinks that nuclear is the only way.

Read a more in-depth version of the case for nuclear energy in the full blog post.

For Mark, the only argument against nuclear power is a political one: that people won't want or accept it.

He says that he knows people in all kinds of mainstream environmental groups — such as Greenpeace — who agree that nuclear must be a vital part of any plan to solve climate change. But, because they think they'll be ostracized if they speak up, they keep their mouths shut.

Mark thinks this willingness to indulge beliefs that contradict scientific evidence stands in the way of actually fully addressing climate change, and so he’s helping to build a movement of folks who are out and proud about their support for nuclear energy.

This is only one topic of many in today’s interview. Arden, Rob, and Mark also discuss:

• At what degrees of warming does societal collapse become likely

• Whether climate change could lead to human extinction

• What environmentalists are getting wrong about climate change

• And much more.

Get this episode by subscribing: type 80,000 Hours into your podcasting app. Or read the linked transcript.

Producer: Keiran Harris.

Audio mastering: Ben Cordell.

Transcriptions: Zakee Ulhaq.

02:08:26 · 20/08/2020

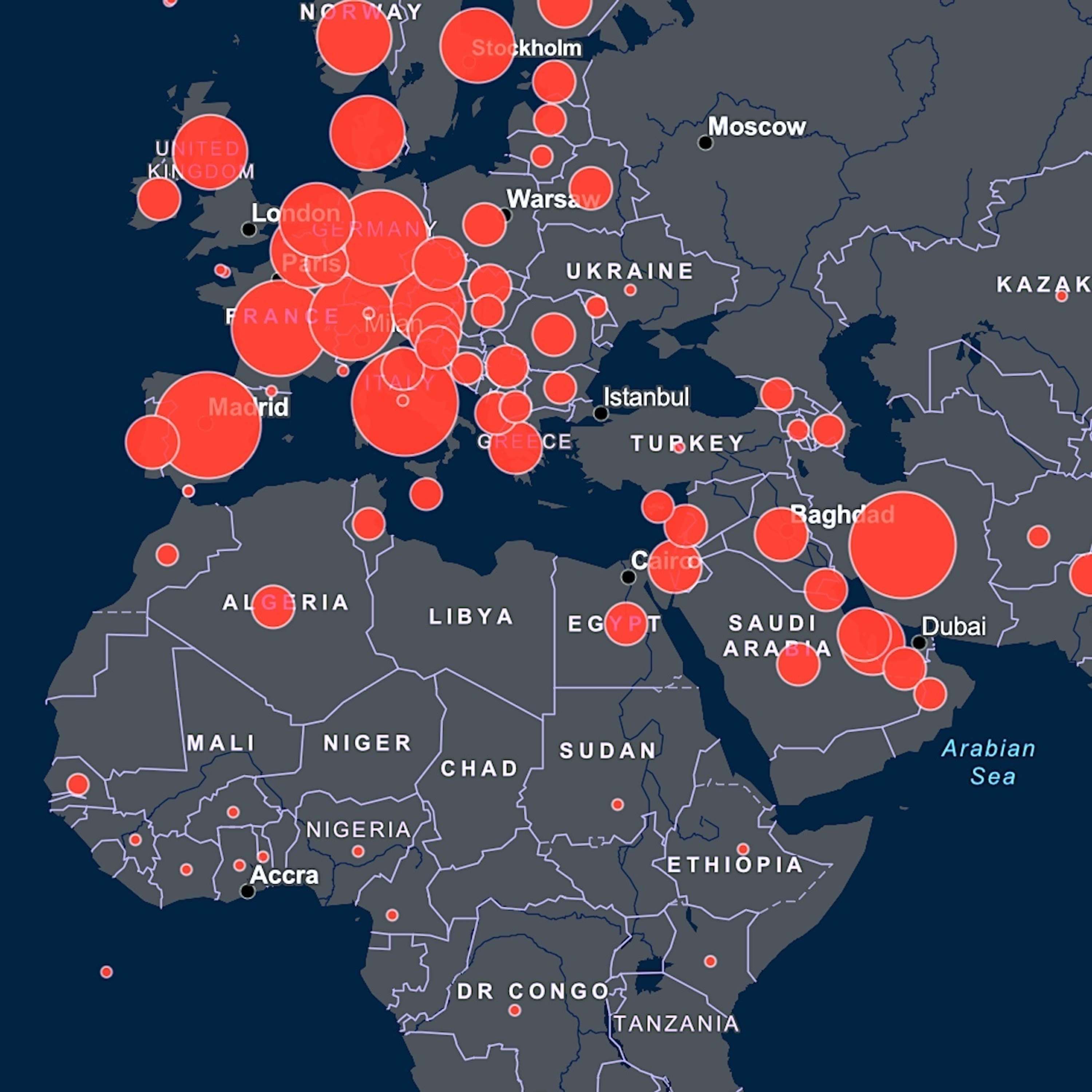

#84 – Shruti Rajagopalan on what India did to stop COVID-19 and how well it worked

When COVID-19 struck the US, everyone was told that hand sanitizer needed to be saved for healthcare professionals, so they should just wash their hands instead. But in India, many homes lack reliable piped water, so they had to do the opposite: distribute hand sanitizer as widely as possible.

American advocates for banning single-use plastic straws might be outraged at the widespread adoption of single-use hand sanitizer sachets in India. But the US and India are very different places, and it might be the only way out when you're facing a pandemic without running water.

According to today’s guest, Shruti Rajagopalan, Senior Research Fellow at the Mercatus Center at George Mason University, that's typical: context is key to policy-making. This prompted Shruti to propose, back in April, a set of policy responses designed specifically for India. Unfortunately, she thinks it's surprisingly hard to know what one should and shouldn't imitate from overseas.

Links to learn more, summary and full transcript.

For instance, some places in India installed shared handwashing stations in bus stops and train stations, which is something no developed country would advise. But in India, you can't necessarily wash your hands at home, so shared faucets might be the lesser of two evils. (Though note scientists have downgraded the importance of hand hygiene lately.)

Stay-at-home orders offer a more serious example. Developing countries find themselves in a serious bind that rich countries do not. With nearly no slack in healthcare capacity, India lacks equipment to treat even a small number of COVID-19 patients. That suggests strict controls on movement and economic activity might be necessary to control the pandemic.

But many people in India and elsewhere can't afford to shelter in place for weeks, let alone months. And governments in poorer countries may not be able to afford to send everyone money, even where they have the infrastructure to do so fast enough.

India ultimately did impose strict lockdowns, lasting almost 70 days, but the human toll has been larger than in rich countries, with vast numbers of migrant workers stranded far from home with limited, if any, income support. There were no trains or buses, and the government made no provision to deal with the situation. Unable to afford rent where they were, many people had to walk hundreds of kilometers to reach home, carrying children and belongings with them.

But in some other ways the context of developing countries is more promising. In the US many people melted down when asked to wear face masks. But in South Asia, people just wore them. Shruti isn’t sure whether that's because of existing challenges with high pollution, past experiences with pandemics, or because intergenerational living makes the wellbeing of others more salient, but the end result is that masks weren’t politicised in the way they were in the US.

In addition, despite the suffering caused by India's policy response to COVID-19, public support for the measures and the government remains high, and India's population is much younger and so less affected by the virus.

In this episode, Howie and Shruti explore the unique policy challenges facing India in its battle with COVID-19, what the country has tried to do, and how it has gone. They also cover:

• What an economist can bring to the table during a pandemic

• The mystery of India’s surprisingly low mortality rate

• Policies that should be implemented today

• What makes a good constitution

Chapters:

• Rob’s intro (00:00:00)

• The interview begins (00:02:27)

• What an economist can bring to the table for COVID-19 (00:07:54)

• What India has done about the coronavirus (00:12:24)

• Why it took so long for India to start seeing a lot of cases (00:25:08)

• How India is doing at the moment with COVID-19 (00:27:55)

• Is the mortality rate surprisingly low in India? (00:40:32)

• Why Southeast Asian countries have done so well so far (00:55:43)

• Different attitudes to masks globally (00:59:25)

• Differences in policy approaches for developing countries (01:07:27)

• India’s strict lockdown (01:25:56)

• Lockdown for the average rural Indian (01:39:11)

• Public reaction to the lockdown in India (01:44:39)

• Policies that should be implemented today (01:50:29)

• India’s overall reaction to COVID-19 (01:57:23)

• Constitutional economics (02:03:28)

• What makes a good constitution (02:11:47)

• Emergent Ventures (02:27:34)

• Careers (02:47:57)

• Rob’s outro (02:57:51)

Producer: Keiran Harris.

Audio mastering: Ben Cordell.

Transcriptions: Zakee Ulhaq.

02:58:14 · 13/08/2020

#83 - Jennifer Doleac on preventing crime without police and prisons

The killing of George Floyd has prompted a great deal of debate over whether the US should reduce the size of its police departments. The research literature suggests that the presence of police officers does reduce crime, though they're expensive and, as is increasingly recognised, impose substantial harms on the populations they are meant to be protecting, especially communities of colour.

So maybe we ought to shift our focus to effective but unconventional approaches to crime prevention: approaches that don't require police or prisons and the human toll they bring with them.

Today’s guest, Jennifer Doleac, Associate Professor of Economics at Texas A&M University and Director of the Justice Tech Lab, is an expert on empirical research into policing, law and incarceration. In this extensive interview, she highlights three alternative ways to effectively prevent crime: better street lighting, cognitive behavioral therapy, and lead reduction.

One of Jennifer’s papers used switches into and out of daylight saving time as a 'natural experiment' to measure the effect of light levels on crime. One day the sun sets at 5pm; the next day it sets at 6pm. When that evening hour is dark instead of light, robberies during it roughly double.

Links to sources for the claims in these show notes, other resources to learn more, and a full transcript.

The idea here is that if you try to rob someone in broad daylight, they might see you coming, and witnesses might later be able to identify you. You're just more likely to get caught.

You might think: "Well, people will just commit crime in the morning instead." But it looks like criminals aren’t early risers, and that doesn’t happen.

On her unusually rigorous podcast Probable Causation, Jennifer spoke to one of the authors of a related study, in which very bright streetlights were randomly added to some public housing complexes but not others. They found the lights reduced outdoor night-time crime by 36%, at little cost.
The next best thing to sunlight is artificial light, so just installing more streetlights might be one of the easiest ways to cut crime, without having to hassle or punish anyone.

The second approach is cognitive behavioral therapy (CBT), in which you're taught to slow down your decision-making and think through your assumptions before acting.

There was a randomised controlled trial in schools and juvenile detention facilities in Chicago, where the kids assigned to get CBT were followed over time and compared with those who were not assigned to receive it. They found the CBT course reduced rearrest rates by a third, and lowered the likelihood of a child returning to a juvenile detention facility by 20%.

Jennifer says that the program isn’t that expensive, and the benefits are massive. Everyone would probably benefit from being able to talk through their problems, but the gains are especially large for people who've grown up with the trauma of violence in their lives.

Finally, Jennifer thinks that lead reduction might be the best buy of all in crime prevention…

Blog post truncated due to length limits. Finish reading the full post here.

In today’s conversation, Rob and Jennifer also cover, among many other things:

• Misconduct, hiring practices and accountability among US police

• Procedural justice training

• Overrated policy ideas

• Policies to try to reduce racial discrimination

• The effects of DNA databases

• Diversity in economics

• The quality of social science research

Get this episode by subscribing: type 80,000 Hours into your podcasting app.

Producer: Keiran Harris.

Audio mastering: Ben Cordell.

Transcriptions: Zakee Ulhaq.

02:23:03 · 31/07/2020

#82 – James Forman Jr on reducing the cruelty of the US criminal legal system

No democracy has ever incarcerated as many people as the United States. To get its incarceration rate down to the global average, the US would have to release 3 in 4 people in its prisons today.

The effects on Black Americans have been especially severe: Black people make up 12% of the US population but 33% of its prison population. In the early 2000s, when incarceration reached its peak, the US government estimated that 32% of Black boys would go to prison at some point in their lives, 5.5 times the figure for whites.

Contrary to popular understanding, nonviolent drug offenders make up less than a fifth of the incarcerated population. The only way for the US to get its incarceration rate near the global average will be to shorten prison sentences for so-called 'violent criminals', a politically toxic idea. But could we change that?

According to today’s guest, Professor James Forman Jr (a former public defender in Washington DC, Pulitzer Prize-winning author of Locking Up Our Own: Crime and Punishment in Black America, and now a professor at Yale Law School), there are two things we have to do to make that happen.

Links to learn more, summary and full transcript.

First, he thinks we should lose the term 'violent offender', and maybe even 'violent crime'. When you say 'violent crime', most people immediately think of murder and rape, but they're only a small fraction of the crimes that the law deems violent. In reality, the crime that puts the most people in prison in the US is robbery. And the law says that robbery is a violent crime whether a weapon is involved or not. By moving away from the catch-all category of 'violent criminals', we can judge the risk posed by individual people more sensibly.

Second, he thinks we should embrace the restorative justice movement. Instead of asking "What was the law? Who broke it? What should the punishment be?", restorative justice asks "Who was harmed? Who harmed them? And what can we as a society, including the person who committed the harm, do to try to remedy that harm?"

Instead of being narrowly focused on how many years people should spend in prison as retribution, it starts a different conversation.

You might think this apparently softer approach would be unsatisfying to victims of crime. But James has found that a lot of victims of crime feel the current system doesn't help them in any meaningful way. What they primarily want to know is: why did this happen to me?

The best way to find that out is to actually talk to the person who harmed them, and in doing so gain a better understanding of the underlying factors behind the crime. The restorative justice approach facilitates these conversations in a way the current system doesn't allow, and can include restitution, apologies, and face-to-face reconciliation.

That’s just one topic of many covered in today’s episode, with much of the conversation focusing on Professor Forman’s 2018 book Locking Up Our Own (an examination of the historical roots of contemporary criminal justice practices in the US) and his experience setting up a charter school for at-risk youth in DC.

Chapters:

• Rob’s intro (00:00:00)

• The interview begins (00:02:02)

• How did we get here? (00:04:07)

• The role racism plays in policing today (00:14:47)

• Black American views on policing and criminal justice (00:22:37)

• Has the core argument of the book been controversial? (00:31:51)

• The role that class divisions played in forming the current legal system (00:37:33)

• What are the biggest problems today? (00:40:56)

• What changes in policy would make the biggest difference? (00:52:41)

• Shorter sentences for violent crimes (00:58:26)

• Important recent successes (01:08:21)

• What can people actually do to help? (01:14:38)

Get this episode by subscribing: type 80,000 Hours into your podcasting app. Or read the linked transcript.

Producer: Keiran Harris.

Audio mastering: Ben Cordell.

Transcriptions: Zakee Ulhaq.

01:28:08 · 27/07/2020

#81 - Ben Garfinkel on scrutinising classic AI risk arguments

80,000 Hours, along with many other members of the effective altruism movement, has argued that helping to positively shape the development of artificial intelligence may be one of the best ways to have a lasting, positive impact on the long-term future. Millions of dollars in philanthropic spending, as well as lots of career changes, have been motivated by these arguments.

Today’s guest, Ben Garfinkel, Research Fellow at Oxford’s Future of Humanity Institute, supports the continued expansion of AI safety as a field and believes working on AI is among the very best ways to have a positive impact on the long-term future. But he also believes the classic AI risk arguments have been subject to insufficient scrutiny given this level of investment.

In particular, the case for working on AI if you care about the long-term future has often been made on the basis of concern about AI accidents; it’s actually quite difficult to design systems that you can feel confident will behave the way you want them to in all circumstances.

Nick Bostrom wrote the most fleshed-out version of the argument in his book, Superintelligence. But Ben reminds us that, apart from Bostrom’s book and essays by Eliezer Yudkowsky, there's very little existing writing on existential accidents.

Links to learn more, summary and full transcript.

There have also been very few skeptical experts who have actually sat down and fully engaged with it, writing down point by point where they disagree or where they think the mistakes are. This means that Ben has probably scrutinised classic AI risk arguments as carefully as almost anyone else in the world.

He thinks that most of the arguments for existential accidents rely on fuzzy, abstract concepts like optimisation power, general intelligence or goals, and on toy thought experiments. And he doesn’t think it’s clear we should take these as a strong source of evidence.

Ben’s also concerned that these scenarios often involve massive jumps in the capabilities of a single system, but it's really not clear that we should expect such jumps or find them plausible.

These toy examples also focus on the idea that because human preferences are so nuanced and so hard to state precisely, it should be quite difficult to get a machine that can understand how to obey them.

But Ben points out that it's also the case in machine learning that we can train lots of systems to engage in behaviours that are actually quite nuanced and that we can't specify precisely. If AI systems can recognise faces from images, and fly helicopters, why don’t we think they’ll be able to understand human preferences?

Despite these concerns, Ben is still fairly optimistic about the value of working on AI safety or governance.

He doesn’t think that there are any slam-dunks for improving the future, and so the fact that there are at least plausible pathways for impact by working on AI safety and AI governance, in addition to it still being a very neglected area, puts it head and shoulders above most areas you might choose to work in.

This is the second episode hosted by our Strategy Advisor Howie Lempel, and he and Ben cover, among many other things:

• The threat of AI systems increasing the risk of permanently damaging conflict or collapse

• The possibility of permanently locking in a positive or negative future

• Contenders for types of advanced systems

• What role AI should play in the effective altruism portfolio

Get this episode by subscribing: type 80,000 Hours into your podcasting app. Or read the linked transcript.

Producer: Keiran Harris.

Audio mastering: Ben Cordell.

Transcriptions: Zakee Ulhaq.

02:38:28 · 09/07/2020

Advice on how to read our advice (Article)

This is the fourth release in our new series of audio articles. If you want to read the original article or check out the links within it, you can find them here.

"We’ve found that readers sometimes interpret or apply our advice in ways we didn’t anticipate and wouldn’t exactly recommend. That’s hard to avoid when you’re writing for a range of people with different personalities and initial views.

To help get on the same page, here’s some advice about our advice, for those about to launch into reading our site.

We want our writing to inform people’s views, but only in proportion to the likelihood that we’re actually right. So we need to make sure you have a balanced perspective on how compelling the evidence is for the different claims we make on the site, and how much weight to put on our advice in your situation.

This piece includes a list of points to bear in mind when reading our site, and some thoughts on how to avoid the communication problems we face..."

As the title suggests, this was written with our website content in mind, but plenty of it applies to the careers sections of the podcast too, as well as to our bonus episodes with members of the 80,000 Hours team, such as Arden and Rob’s episode on demandingness, work-life balance and injustice, which aired on February 25th of this year.

And if you have feedback on these, positive or negative, it’d be great if you could email us at [email protected].

15:23 · 29/06/2020

#80 – Stuart Russell on why our approach to AI is broken and how to fix it

Stuart Russell, Professor at UC Berkeley and co-author of the most popular AI textbook, thinks the way we approach machine learning today is fundamentally flawed.

In his new book, Human Compatible, he outlines the 'standard model' of AI development, in which intelligence is measured as the ability to achieve some definite, completely-known objective that we've stated explicitly. This is so obvious it almost doesn't even seem like a design choice, but it is.

Unfortunately there's a big problem with this approach: it's incredibly hard to say exactly what you want. AI today lacks common sense, and simply does whatever we've asked it to. That's true even if the goal isn't what we really want, or the methods it's choosing are ones we would never accept.

We already see AIs misbehaving for this reason. Stuart points to the example of YouTube's recommender algorithm, which reportedly nudged users towards extreme political views because that made it easier to keep them on the site. This isn't something we wanted, but it helped achieve the algorithm's objective: maximise viewing time.

Like King Midas, who asked to be able to turn everything into gold but ended up unable to eat, we get too much of what we've asked for.

Links to learn more, summary and full transcript.

This 'alignment' problem will get more and more severe as machine learning is embedded in more and more places: recommending us news, operating power grids, deciding prison sentences, doing surgery, and fighting wars. If we're ever to hand over much of the economy to thinking machines, we can't count on ourselves correctly saying exactly what we want the AI to do every time.

Stuart isn't just dissatisfied with the current model, though; he has a specific solution. According to him, we need to redesign AI around three principles:

1. The AI system's objective is to achieve what humans want.

2. But the system isn't sure what we want.

3. And it figures out what we want by observing our behaviour.
Stuart thinks this design architecture, if implemented, would be a big step forward towards reliably beneficial AI.

For instance, a machine built on these principles would be happy to be turned off if that's what its owner thought was best, while one built on the standard model should resist being turned off because being deactivated prevents it from achieving its goal. As Stuart says, "you can't fetch the coffee if you're dead."

These principles lend themselves towards machines that are modest and cautious, and check in when they aren't confident they're truly achieving what we want.

We've made progress toward putting these principles into practice, but the remaining engineering problems are substantial. Among other things, the resulting AIs need to be able to interpret what people really mean to say based on the context of a situation. And they need to guess when we've rejected an option because we've considered it and decided it's a bad idea, and when we simply haven't thought about it at all.

Stuart thinks all of these problems are surmountable, if we put in the work. The harder problems may end up being social and political.

When each of us can have an AI of our own, one smarter than any person, how do we resolve conflicts between people and their AI agents?
And if AIs end up doing most work that people do today, how can humans avoid becoming enfeebled, like lazy children tended to by machines, but not intellectually developed enough to know what they really want?

Chapters:

• Rob’s intro (00:00:00)

• The interview begins (00:19:06)

• Human Compatible: Artificial Intelligence and the Problem of Control (00:21:27)

• Principles for Beneficial Machines (00:29:25)

• AI moral rights (00:33:05)

• Humble machines (00:39:35)

• Learning to predict human preferences (00:45:55)

• Animals and AI (00:49:33)

• Enfeeblement problem (00:58:21)

• Counterarguments (01:07:09)

• Orthogonality thesis (01:24:25)

• Intelligence explosion (01:29:15)

• Policy ideas (01:38:39)

• What most needs to be done (01:50:14)

Producer: Keiran Harris.

Audio mastering: Ben Cordell.

Transcriptions: Zakee Ulhaq.

02:13:17 · 22/06/2020

What anonymous contributors think about important life and career questions (Article)

Today we’re launching the final entry of our ‘anonymous answers’ series on the website.

It features answers to 23 different questions including “How have you seen talented people fail in their work?” and “What’s one way to be successful you don’t think people talk about enough?”, from anonymous people whose work we admire.

We thought a lot of the responses were really interesting; some were provocative, others just surprising. And as intended, they span a very wide range of opinions.

So we decided to share some highlights here with you podcast subscribers. This is only a sample, though: a few answers to just 10 of those 23 questions.

You can find the rest of the answers at 80000hours.org/anonymous or follow a link here to an individual entry:

1. What's good career advice you wouldn’t want to have your name on?

2. How have you seen talented people fail in their work?

3. What’s the thing people most overrate in their career?

4. If you were at the start of your career again, what would you do differently this time?

5. If you're a talented young person, how risk averse should you be?

6. Among people trying to improve the world, what are the bad habits you see most often?

7. What mistakes do people most often make when deciding what work to do?

8. What's one way to be successful you don't think people talk about enough?

9. How honest & candid should high-profile people really be?

10. What’s some underrated general life advice?

11. Should the effective altruism community grow faster or slower? And should it be broader, or narrower?

12. What are the biggest flaws of 80,000 Hours?

13. What are the biggest flaws of the effective altruism community?

14. How should the effective altruism community think about diversity?

15. Are there any myths that you feel obligated to support publicly? And five other questions.

Finally, if you’d like us to produce more or less content like this, please let us know your opinion at [email protected].

37:10 · 05/06/2020

#79 – A.J. Jacobs on radical honesty, following the whole Bible, and reframing global problems as puzzles

Today’s guest, New York Times bestselling author A.J. Jacobs, always hated Judge Judy. But after he found out that she was his seventh cousin, he thought, "You know what? She's not so bad."

Hijacking this bias towards family and trying to broaden it to everyone led to his three-year adventure to help build the biggest family tree in history.

He’s also spent months saying whatever was on his mind, tried to become the healthiest person in the world, read 33,000 pages of facts, spent a year following the Bible literally, thanked everyone involved in making his morning cup of coffee, and tried to figure out how to do the most good. His next book will ask: if we reframe global problems as puzzles, would the world be a better place?

Links to learn more, summary and full transcript.

This is the first time I’ve hosted the podcast, and I’m hoping to convince people to listen with this attempt at clever show notes that change style each paragraph to reference different A.J. experiments. I don’t actually think it’s that clever, but all of my other ideas seemed worse. I really have no idea how people will react to this episode; I loved it, but I definitely think I’m more entertaining than almost anyone else will. (Radical Honesty.)

We do talk about some useful stuff, one of which is the concept of micro goals. When you wake up in the morning, just commit to putting on your workout clothes. Once they’re on, maybe you’ll think that you might as well get on the treadmill, just for a minute. And once you’re on for 1 minute, you’ll often stay on for 20. So I’m not asking you to commit to listening to the whole episode, just to put on your headphones. (Drop Dead Healthy.)

Another reason to listen is for the facts:

• The Bayer aspirin company invented heroin as a cough suppressant

• Coriander is just the British way of saying cilantro

• Dogs have a third eyelid to protect the eyeball from irritants

• A.J. read all 44 million words of the Encyclopedia Britannica from A to Z, which drove home the idea that we know so little about the world (although he does now know that opossums have 13 nipples)

(The Know-It-All.)

One extra argument for listening: if you interpret the second commandment literally, then it tells you not to make a likeness of anything in heaven, on earth, or underwater, which rules out basically all images. That means no photos, no TV, no movies. So, if you want to respect the Bible, you should definitely consider making podcasts your main source of entertainment (as long as you’re not listening on the Sabbath). (The Year of Living Biblically.)

I’m so thankful to A.J. for doing this. But I also want to thank Julie, Jasper, Zane and Lucas who allowed me to spend the day in their home; the construction worker who told me how to get to my subway platform on the morning of the interview; and Queen Jadwiga for making bagels popular in the 1300s, which kept me going during the recording. (Thanks a Thousand.)

We also discuss:

• Blackmailing yourself

• The most extreme ideas A.J.’s ever considered

• Doing good as a writer

• And much more.

Chapters:

• Rob’s intro (00:00:00)

• The interview begins (00:01:51)

• Puzzles (00:05:41)

• Radical honesty (00:12:18)

• The Year of Living Biblically (00:24:17)

• Thanks A Thousand (00:38:04)

• Drop Dead Healthy (00:49:22)

• Blackmailing yourself (00:57:46)

• The Know-It-All (01:03:00)

• Effective altruism (01:31:38)

• Longtermism (01:55:35)

• It’s All Relative (02:01:00)

• Journalism (02:10:06)

• Writing careers (02:17:15)

• Rob’s outro (02:34:37)

Producer: Keiran Harris.

Audio mastering: Ben Cordell.

Transcriptions: Zakee Ulhaq.

02:38:47 · 01/06/2020

#78 – Danny Hernandez on forecasting and the drivers of AI progress

Companies use about 300,000 times more computation training the best AI systems today than they did in 2012, and algorithmic innovations have also made them 25 times more efficient at the same tasks.

These are the headline results of two recent papers, AI and Compute and AI and Efficiency, from the Foresight Team at OpenAI. In today's episode I spoke with one of the authors, Danny Hernandez, who joined OpenAI after helping develop better forecasting methods at Twitch and Open Philanthropy.

Danny and I talk about how to understand his team's results and what they mean (and don't mean) for how we should think about progress in AI going forward.

Links to learn more, summary and full transcript.

Debates around the future of AI can sometimes be pretty abstract and theoretical. Danny hopes that providing rigorous measurements of some of the inputs to AI progress so far can help us better understand what causes that progress, as well as ground debates about the future of AI in a better shared understanding of the field.

If this research sounds appealing, you might be interested in applying to join OpenAI's Foresight team; they're currently hiring research engineers.
In the interview, Danny and I (Arden Koehler) also discuss a range of other topics, including:
• The question of which experts to believe
• Danny's journey to working at OpenAI
• The usefulness of "decision boundaries"
• The importance of Moore's law for people who care about the long-term future
• What OpenAI's Foresight Team's findings might imply for policy
• The question of whether progress in the performance of AI systems is linear
• The safety teams at OpenAI and who they're looking to hire
• One idea for finding someone to guide your learning
• The importance of hardware expertise for making a positive impact

Chapters:
• Rob’s intro (00:00:00)
• The interview begins (00:01:29)
• Forecasting (00:07:11)
• Improving the public conversation around AI (00:14:41)
• Danny’s path to OpenAI (00:24:08)
• Calibration training (00:27:18)
• AI and Compute (00:45:22)
• AI and Efficiency (01:09:22)
• Safety teams at OpenAI (01:39:03)
• Careers (01:49:46)
• AI hardware as a possible path to impact (01:55:57)
• Triggers for people’s major decisions (02:08:44)

Producer: Keiran Harris
Audio mastering: Ben Cordell
Transcriptions: Zakee Ulhaq

02:11:37 · 22/05/2020

#77 – Marc Lipsitch on whether we're winning or losing against COVID-19

In March, Professor Marc Lipsitch (Director of Harvard's Center for Communicable Disease Dynamics) abruptly found himself a global celebrity, his social media following growing 40-fold and journalists knocking down his door, as everyone turned to him for information they could trust. Here he lays out where the fight against COVID-19 stands today, why he's open to deliberately giving people COVID-19 to speed up vaccine development, and how we could do better next time.

As Marc tells us, island nations like Taiwan and New Zealand are successfully suppressing SARS-CoV-2. But everyone else is struggling.

• Links to learn more, summary and full transcript.

Even Singapore, with plenty of warning and one of the best test-and-trace systems in the world, lost control of the virus in mid-April after successfully holding back the tide for two months. This doesn't bode well for how the US or Europe will cope as they ease their lockdowns. It also suggests it would have been exceedingly hard for China to stop the virus before it spread overseas.

But sadly, there's no easy way out. The original estimates of COVID-19's infection fatality rate, 0.5–1%, have turned out to be basically right. And the latest serology surveys indicate only 5–10% of people in countries like the US, UK and Spain have been infected so far, leaving us far short of herd immunity. To get there, even these worst-affected countries would need to endure something like ten times the number of deaths they have so far.

Marc has one good piece of news: research suggests that most of those who get infected do indeed develop immunity, for a while at least.

To escape the COVID-19 trap sooner rather than later, Marc recommends we go hard on all the familiar options (vaccines, antivirals, and mass testing), but also open our minds to creative options we've so far left on the shelf.
Despite the importance of his work, even now the training and grant programs that produced the community of experts Marc is part of are shrinking. We look at a new article he's written about how to instead build and improve the field of epidemiology, so humanity can respond faster and smarter next time we face a disease that could kill millions and cost tens of trillions of dollars.

We also cover:
• How listeners might contribute as future contagious disease experts, or donors to current projects
• How we can learn from cross-country comparisons
• Modelling that has gone wrong in an instructive way
• What governments should stop doing
• How people can figure out who to trust, and who has been most on the mark this time
• Why Marc supports infecting people with COVID-19 to speed up the development of vaccines
• How we can ensure there's population-level surveillance early during the next pandemic
• Whether people from other fields trying to help with COVID-19 have done more good than harm
• Whether it's experts in diseases, or experts in forecasting, who produce better disease forecasts

Chapters:
• Rob’s intro (00:00:00)
• The interview begins (00:01:45)
• Things Rob wishes he knew about COVID-19 (00:05:23)
• Cross-country comparisons (00:10:53)
• Any government activities we should stop? (00:21:24)
• Lessons from COVID-19 (00:33:31)
• Global catastrophic biological risks (00:37:58)
• Human challenge trials (00:43:12)
• Disease surveillance (00:50:07)
• Who should we trust? (00:58:12)
• Epidemiology as a field (01:13:05)
• Careers (01:31:28)

Producer: Keiran Harris.
Audio mastering: Ben Cordell.
Transcriptions: Zakee Ulhaq.

01:37:05 · 18/05/2020

Article: Ways people trying to do good accidentally make things worse, and how to avoid them

Today’s release is the second experiment in making audio versions of our articles.

The first was a narration of Greg Lewis’ terrific problem profile on ‘Reducing global catastrophic biological risks’, which you can find on the podcast feed just before episode #74, our interview with Greg about the piece.

If you want to check out the links in today’s article, you can find those here.

And if you have feedback on these, positive or negative, it’d be great if you could email us at [email protected].

26:46 · 12/05/2020

#76 – Tara Kirk Sell on misinformation, who's done well and badly, & what to reopen first

Amid a rising COVID-19 death toll and looming economic disaster, we’ve been looking for good news, and one thing we're especially thankful for is the Johns Hopkins Center for Health Security (CHS). CHS focuses on protecting us from major biological, chemical or nuclear disasters, through research that informs governments around the world. While this pandemic surprised many, just last October the Center ran a simulation of a 'new coronavirus' scenario to identify weaknesses in our ability to quickly respond. Their expertise has given them a key role in figuring out how to fight COVID-19.

Today’s guest, Dr Tara Kirk Sell, did her PhD in policy and communication during disease outbreaks, and has worked at CHS for 11 years on a range of important projects.

• Links to learn more, summary and full transcript.

Last year she was a leader on Collective Intelligence for Disease Prediction, a project designed to sound the alarm about upcoming pandemics before others are paying attention. Incredibly, the project almost closed in December, with COVID-19 just starting to spread around the world, but it received new funding that allowed it to respond quickly to the emerging disease.

She also contributed to a recent report attempting to explain the risks of specific types of activities resuming when COVID-19 lockdowns end. We can't achieve zero risk, so differentiating activities on a spectrum is crucial. Choosing wisely can help us lead more normal lives without reviving the pandemic. Dance clubs will have to stay closed, but hairdressers can adapt to minimise transmission, and Tara, who happens to be an Olympic silver medallist in swimming, suggests outdoor non-contact sports could resume soon without much risk.

Her latest project deals with the challenge of misinformation during disease outbreaks.
Analysing the Ebola communication crisis of 2014, her team found that even trained coders with public health expertise sometimes needed help to distinguish between true and misleading tweets, showing the danger of a continued lack of definitive information about a virus and how it’s transmitted.

The challenge for governments is not simple. If they acknowledge how much they don't know, people may look elsewhere for guidance. But if they pretend to know things they don't, the result can be a huge loss of trust.

Despite their intense focus on COVID-19, researchers at CHS know that this is no one-off event. Many aspects of our collective response this time around have been alarmingly poor, and it won’t be long before Tara and her colleagues need to turn their minds to next time.

You can now donate to CHS through Effective Altruism Funds. Donations made through EA Funds are tax-deductible in the US, the UK, and the Netherlands.

Tara and Rob also discuss:
• Who has overperformed and underperformed expectations during COVID-19?
• When are people right to mistrust authorities?
• The media’s responsibility to be right
• What policy changes should be prioritised for next time
• Should we prepare for future pandemics while COVID-19 is still going?
• The importance of keeping non-COVID health problems in mind
• The psychological difference between staying home voluntarily and being forced to
• Mistakes that we in the general public might be making
• Emerging technologies with the potential to reduce global catastrophic biological risks

Chapters:
• Rob’s intro (00:00:00)
• The interview begins (00:01:43)
• Misinformation (00:05:07)
• Who has done well during COVID-19? (00:22:19)
• Guidance for governors on reopening (00:34:05)
• Collective Intelligence for Disease Prediction project (00:45:35)
• What else is CHS trying to do to address the pandemic? (00:59:51)
• Deaths are not the only health impact of importance (01:05:33)
• Policy change for future pandemics (01:10:57)
• Emerging technologies with potential to reduce global catastrophic biological risks (01:22:37)
• Careers (01:38:52)
• Good news about COVID-19 (01:44:23)

Producer: Keiran Harris.
Audio mastering: Ben Cordell.
Transcriptions: Zakee Ulhaq.

01:53:00 · 08/05/2020

#75 – Michelle Hutchinson on what people most often ask 80,000 Hours

Since it was founded, 80,000 Hours has done one-on-one calls to supplement our online content and offer more personalised advice. We try to help people get clear on their most plausible paths and the key uncertainties they face in choosing between them, and we provide resources, pointers, and introductions to help them in those paths.

I (Michelle Hutchinson) joined the team a couple of years ago after working at Oxford's Global Priorities Institute, and these days I'm 80,000 Hours' Head of Advising. Since then, chatting to hundreds of people about their career plans has given me some idea of the kinds of things it’s useful for people to hear about when thinking through their careers. So we thought it would be useful to discuss some of them on the show for everyone to hear.

• Links to learn more, summary and full transcript.
• See over 500 vacancies on our job board.
• Apply for one-on-one career advising.

Among other common topics, we cover:
• Why traditional careers advice involves thinking through what types of roles you enjoy followed by which of those are impactful, while we recommend going the other way: ranking roles on impact, and then going down the list to find the one you think you’d most flourish in.
• That if you’re pitching your job search at the right level of role, you’ll need to apply to a large number of different jobs. So it's wise to broaden your options by applying for both stretch and backup roles, and not over-emphasising a small number of organisations.
• Our suggested process for writing a longer-term career plan: 1. shortlist your best medium- to long-term career options, then 2. figure out the key uncertainties in choosing between them, and 3. map out concrete next steps to resolve those uncertainties.
• Why many listeners aren't spending enough time finding out what the day-to-day work is like in paths they're considering, or reaching out to people for advice or opportunities.
• The difficulty of maintaining the ambition to increase your social impact, while also being proud of and motivated by what you're already accomplishing.

I also thought it might be useful to give people a sense of what I do and don’t do in advising calls, to help them figure out if they should sign up for it. If you’re wondering whether you’ll benefit from advising, bear in mind that it tends to be more useful to people:
1. With similar views to 80,000 Hours on what the world’s most pressing problems are, because we’ve done the most research on the problems we think it’s most important to address.
2. Who don’t yet have close connections with people working at effective altruist organisations.
3. Who aren’t strongly locationally constrained.

If you’re unsure, it doesn’t take long to apply, and a lot of people say they find the application form itself helps them reflect on their plans. We’re particularly keen to hear from people from under-represented backgrounds.

Also in this episode:
• I describe mistakes I’ve made in advising, and career changes made by people I’ve spoken with.
• Rob and I argue about what risks to take with your career, like when it’s sensible to take a study break, or start from the bottom in a new career path.
• I try to forecast how I’ll change after I have a baby, Rob speculates wildly on what motherhood is like, and Arden and I mercilessly mock Rob.

Chapters:
• Rob’s intro (00:00:00)
• The interview begins (00:02:50)
• The process of advising (00:09:34)
• We’re not just excited about our priority paths (00:14:37)
• Common things Michelle says during advising (00:18:13)
• Interpersonal comparisons (00:31:18)
• Thinking about current impact (00:40:31)
• Applying to different kinds of orgs (00:42:29)
• Difference in impact between jobs / causes (00:49:04)
• Common mistakes (00:55:40)
• Career change stories (01:11:44)
• When is advising really useful for people? (01:24:28)
• Managing risk in careers (01:55:29)

Producer: Keiran Harris.
Audio mastering: Ben Cordell.
Transcriptions: Zakee Ulhaq.

02:13:06 · 28/04/2020

#74 – Dr Greg Lewis on COVID-19 & catastrophic biological risks