Hello and welcome to the a16z Podcast. I'm Lauren Richardson. The COVID-19 pandemic has increased the visibility of scientists and the scientific process to the broader public.

Suddenly, scientists working on virology and infectious disease dynamics have seen their public profiles expand rapidly.

One such scientist is our special guest in this episode, Trevor Bedford, an associate professor at the Fred Hutchinson Cancer Research Center.

An expert in genomic epidemiology, he and his collaborators built Nextstrain, which shares real-time interactive data visualizations to track the spread of viruses through populations.

a16z bio deal team partner Judy Savitskaya and I chat with Trevor about how genomic epidemiology can inform public health decisions, viral mutation and spillover from animals into humans, and what can be done now to prevent the next big pandemic.

But first, we discussed the shifts in scientific communication to preprints and open science, a topic we will dig deeper into on future episodes.

While these pre-publication and pre-peer-review research articles speed the scientific process by sharing results early, they can also lead to misinterpretation and misinformation.

Everyone loves the alliteration of the promise and the peril of preprints. And I think this is the perfect use case of that.

We've seen a lot of work, not peer reviewed, but out in the world and making a huge difference for the positive and really driving the science and the public health decisions.

But on the flip side, we're also seeing preprints go viral that end up having their claims tempered.

And so I'd like to talk to you about kind of your personal experience with this and how you think about that balance between promise and peril.

Yeah, this has obviously been very challenging. As a working scientist, it's phenomenally helpful for my own ability to do science.

If we look at how quickly things move in the preprint space, my own understanding of the pandemic and of SARS-CoV-2 is perhaps a month ahead of where it would be if I only had the peer-reviewed literature to look at.

Where the peril comes in is how that interacts with public understanding, media, and so forth. Part of the issue, I think, is that some things land as a preprint attached to a press release.

That's frustrating compared to a preprint that is really aimed at scientists and the scientific discussion.

That kind of preprint gets around the scientific community perfectly well without needing a press release. You also have the issue of papers that appear in peer-reviewed journals but are bad papers and are wrong.

Just as a silly example, there was the snake flu paper, asserting that the virus somehow came from snakes, which appeared as a peer-reviewed article.

And because of that peer-reviewed stamp, the media runs with those even further, as in "this is true because it's peer-reviewed," when peer review doesn't actually mean truth.

It's sort of a false badge of honor.

And so in some ways, I actually kind of like the fact that preprints come with big warnings that we don't know if this is true or not. That makes the media perhaps take them with more of a grain of salt, the way that all papers should be taken.

But it is still a very challenging interaction. And I think you could probably say that, on average, a peer-reviewed paper is more likely to be true than a preprint.

But that variance is big enough that you can't just rely on that badge to make that decision.

Yeah, there are error bars on that. I am a big fan of preprints, but I see them much more as a tool for the scientific community and not as much for wider dissemination.

Exactly. I do that myself: I basically don't pay attention to preprints in, say, medical countermeasures, because I don't know what's going on there. I pay more attention to what's published in NEJM and The Lancet and so forth.

Whereas in the viral evolution world and epi modeling, I pay attention to preprints and published articles alike. I don't really pay attention to where they're published. I can just pay attention to the underlying science.

And I don't need to rely on the kind of external validation of things.

You're kind of your own peer reviewer. Yes. But you need to have the training to hone that BS sensor to some extent. And in the field that you're an expert in, of course, that's going to be very finely tuned. So you're ready to go.

I like that framing a lot. And this goes just as much for Twitter as it does for bioRxiv. If we go back to Twitter, in January there was this amazing period of full, really open scientific discussion.

Then, as time went on, scientists got very popular on Twitter and everyone started listening in on these conversations. That has made people more careful: they don't speculate quite as much, and the open-science Twitter conversation has become more careful and more grounded.

Do you think it's a good thing for it to be a little less speculative, or do you miss that openness?

Yeah, I miss the openness. I think the caution is necessary given how things are, and for scientific transparency to the general public. But there isn't another venue for scientists to have these more speculative conversations.

I think that's actually a really good point, because speculation is super valuable. That's how creative new hypotheses get made.

And, you know, sometimes the speculation is way off, but it actually sparks an idea that you wouldn't have otherwise thought of.

So I think there's a tension between allowing for that creativity and making sure there's sort of a tempering statement, a qualifier, maybe like a disclosure afterward, like this is pure speculation.

You can have speculative preprints that might well be wrong and still be valuable to scientists in the field. But then you get this knock-on effect when the media runs with things, and that creates the challenge.

Yeah, I think what scientists are actually pretty good at, in general, is stating the probability that something is true, or flagging when something is speculation and when it's not, or when it's a suggestion rather than a statement of fact.

But that doesn't always translate, because it's part of our training as scientists and not necessarily part of people's training as readers.

Yeah, that's a really good point. And what I've seen on the media side is a big tendency, maybe justifiably, to think that the newest piece of data will just supersede everything else.

A new study comes out, you ignore all the previous studies, and you go with the new thing,

rather than the normal scientific approach, where you have a prior that is continually adjusted through time, and a new study might push you a bit in one direction or the other but doesn't supersede what came before.

I'm kind of curious to talk about the psychology of preprints. From the perspective of a scientist in the lab, the biggest fear you have is that you're wrong.

And in the past, it seems like the peer review process has been one of the ways you can feel a little more confident about your results. And furthermore, if you are wrong, you share the blame with the journal and the peer reviewers.

In this case, how do you see your students or your peers considering the possibility of doing a preprint?

Is it something that is becoming more normalized and maybe because of this COVID science burst in the last couple of months is going to become more standard and less scary?

Or do you think that there's sort of an inherent psychology that is still going to make preprints a little bit difficult to get to?

Yeah, that's a really good way of putting it. My own policy is that we submit a preprint when we submit to the journal. So once we have a paper we're confident enough in that we think it can be shared with a journal,

we think it can now be shared with the world. But in this open-science way, we also have an evolving analysis on GitHub that people can look in on, and that's more obviously a work in progress.

But once it gets to the preprint stage, it is something that you've stamped your name on.

Yeah, I think there's the preprint side of this, and then there's the open science side: this push for open science, for making everything available, workable, readable, and machine-learnable, and for this level of collaboration.

So do you think this is going to be an ongoing trend? Or what do you think could continue that drive after this initial push?

What open science has generally allowed, to a decent degree, is a push away from the only benchmark being a paper in a published journal on your CV. You can have other scholarly outputs that are deemed important:

data sets you've produced, code you've written, protocols you've contributed, with all of those things counting toward what you're doing as a scientist,

and with those things made available rather than having everything funneled into papers. So I think this is genuinely very helpful and a really good direction for science to be in.

And I think that that push for products rather than papers is something that I associate with open science and is, I think, remarkably helpful for our pandemic understanding.

So for where you're doing the work in progress on GitHub, is there actually collaboration happening that you're not initiating?

That's happened. A paper that I wrote back in 2012, a simulation-based model of influenza evolution, went up on GitHub,

and then someone else was able to pick up that code, run with it, add things to the model, and write a paper.

Then, subsequently, graduate students in someone else's lab ran with this derived version, got in touch with me, and we wrote a paper together using the model that had by then been pushed along a couple of stages.

Similarly, for nextflu and Nextstrain, I had originally put the very early prototype online.

Richard Neher picked it up and hacked on it separately, and then we merged things back together when it was clear he was interested and we were both making progress.

So I think there are definitely examples where just having the code up has resulted in these kind of happy coincidences of collaboration.

Yeah, it's a really organic way of sparking collaborations.

So let's talk about Nextstrain. I would love to hear what you had been working on, and then how you've pivoted to focus on SARS-CoV-2.

Yeah, so Nextstrain came out of nextflu, which was invented back in 2015. We had started to get much more real-time flu data shared by groups from all over the world.

There's a WHO effort that's been going on for years that is quite an amazing surveillance platform for global flu diversity, which is designed around picking vaccines every six months.

We were able to tap into that data sharing and provide an analysis of what flu looks like this week.

That has continued, and it's been a very productive line of research, trying to inform flu vaccine strain selection. Then, also in 2015, we had the West African Ebola outbreak.

At first, we had a hacky version of nextflu that was designed for Ebola.

People were sharing data from sequencing, and rather than waiting for publication, we just pulled things together into an online dashboard to see what was going on. That seemed like it had legs.

So we went back and did a full rewrite in 2016. That was in time for Zika, which we got working, and since then we've been pulling in more and more outbreaks and viruses as they've happened.

We were pretty happy with where we were in 2019, and I think we were pretty well prepared come January.

So with SARS-CoV-2, the genomic data being shared just worked well with these systems, and we were able to immediately get things online and analyzed.

I think the big difference with SARS-CoV-2 was mainly on the data generation side, where for the first time it was possible to sequence in quasi real time.

In many situations, someone would be sick, a sample would be taken from them, and a genome would be shared within a week or a few days, versus Ebola in 2015, where that generally took months or a year.

So what can you learn from all this genomic information?

So the usual analogy that I use is a game of telephone where people are whispering a word to each other that changes along the line to some degree.

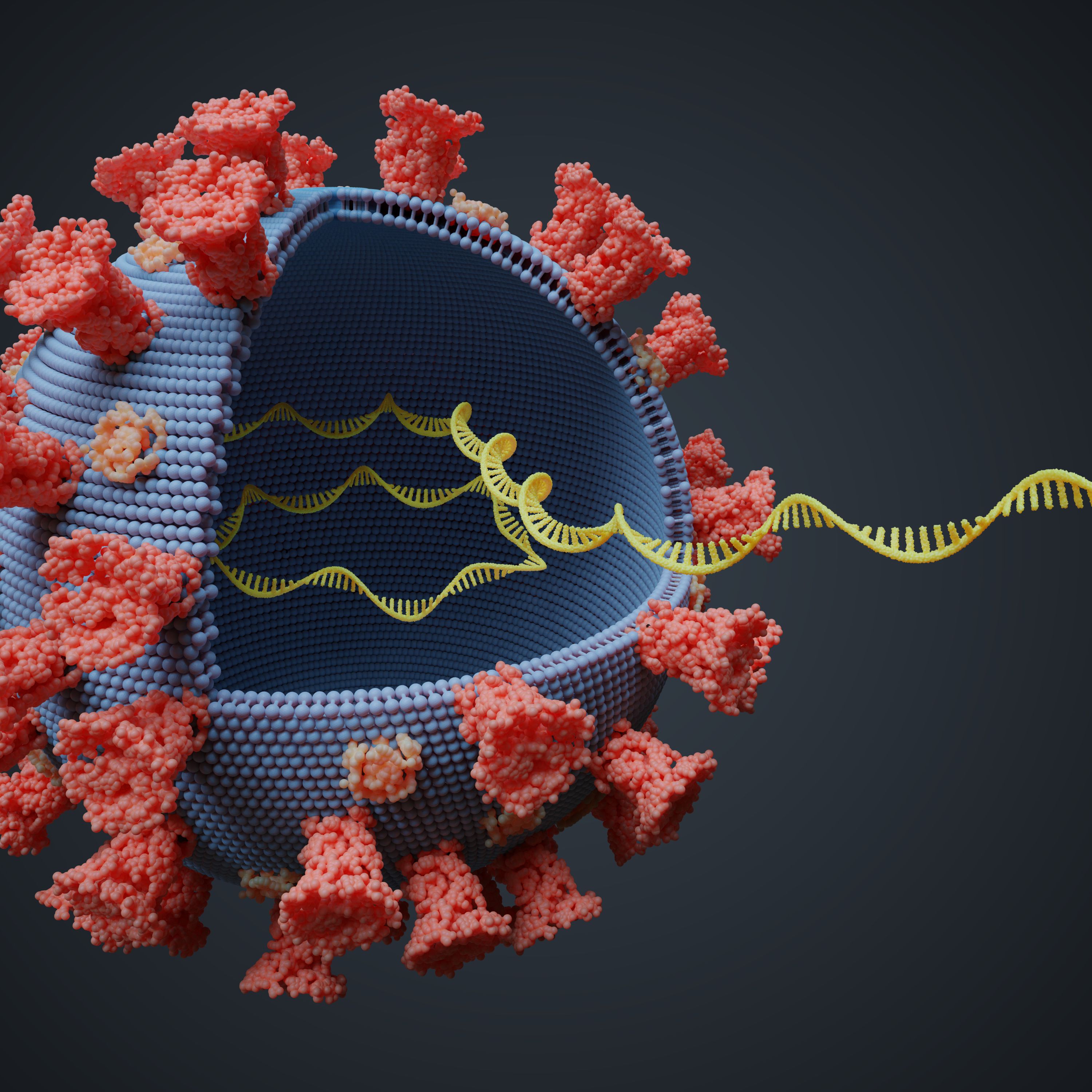

If you were to go in and ask some fraction of people what they heard, you could reconstruct who talked to whom: the who-infected-whom transmission chain. For SARS-CoV-2, you'll get a mutation about once every two weeks.

For flu, it's about once every 10 days. We can use these mutations to say that this cluster of viruses led to that cluster, which led to the next cluster.

That's often very useful for questions like how the virus got from China into Europe and into the US, these slightly larger epidemiological questions. This field has become known as genomic epidemiology.
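To make the telephone analogy concrete, here is a minimal Python sketch. It is not Nextstrain's method, which builds full phylogenetic trees. It assumes each genome is summarized only by the set of mutations it carries relative to a reference, uses the rough rates quoted above (about one mutation per 14 days for SARS-CoV-2 and per 10 days for flu), and treats a genome whose mutations are a strict superset of another genome's as a plausible descendant of that lineage. The sample names and mutation labels are hypothetical.

```python
from __future__ import annotations


def expected_mutations(days: float, days_per_mutation: float) -> float:
    """Expected mutations after `days`, assuming a steady, clock-like rate."""
    return days / days_per_mutation


def immediate_parent(samples: dict[str, frozenset[str]], name: str) -> str | None:
    """Return the sample whose mutation set is the largest strict subset of `name`'s.

    Toy logic: a genome carrying every mutation of another genome, plus extras,
    plausibly descends from that genome's lineage. Returns None if no sample qualifies.
    """
    target = samples[name]
    best, best_size = None, -1
    for other, muts in samples.items():
        # `<` on frozensets is a strict-subset test.
        if other != name and muts < target and len(muts) > best_size:
            best, best_size = other, len(muts)
    return best


if __name__ == "__main__":
    print(expected_mutations(70, 14))  # 5.0 mutations in 10 weeks at the SARS-CoV-2 rate
    print(expected_mutations(70, 10))  # 7.0 mutations in 10 weeks at the flu rate

    # Hypothetical genomes, each described by its set of mutations.
    samples = {
        "A": frozenset({"m1"}),
        "B": frozenset({"m1", "m2"}),
        "C": frozenset({"m1", "m2", "m3"}),
        "D": frozenset({"m1", "m4"}),
    }
    for s in samples:
        print(s, "<-", immediate_parent(samples, s))
```

With these toy samples, B traces back to A, C to B, and D to A. In real data, sequencing errors, recurrent mutations, and unsampled intermediate infections are why a proper tree-building method is needed.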

How can genomic epidemiology inform public health decisions?

Genomic epi is particularly helpful when your surveillance infrastructure is spotty. If you're not catching every case, you can learn a lot from sequencing just a handful of genomes. What we saw for SARS-CoV-2 is that we were able to identify community transmission through the genomics in a way that was perhaps easier, or at least orthogonal to case-based counting of how many travel versus non-travel cases we had.

We saw this in the US, and with partners at the Medical Research Institute in Kinshasa, in the Democratic Republic of the Congo,

who had been set up to sequence Ebola. They were able to sequence their SARS-CoV-2 samples and identify community spread within Kinshasa in a way that might have been difficult to ascertain from case counts alone.

And so I think that was very helpful at the early stages here.

What I think will be particularly helpful is looking, say, state by state, at how much transmission is happening endogenously, driven by new infections arising within the state, versus how much importations are continuing to drive things.

That matters for judging how well your local control efforts are working.

So that potentially gives you information about the types of activities that are or aren't causing transmission, and about the extent to which a given state's exposure is due to the states around it, or to states with a lot of travel between them.

Yeah, exactly. Fundamentally, the questions aren't different from epi questions. It's just a new approach and a new data source for answering them.
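The state-by-state question can be sketched with a crude heuristic: for each genome sampled in a focal state, ask whether its most similar other genome was sampled in the same state. This is an illustrative assumption, not a phylogeographic method. Real analyses infer locations on a tree, and uneven sampling between states biases any approach like this. The names, locations, and short aligned sequences below are hypothetical.

```python
from __future__ import annotations


def hamming(a: str, b: str) -> int:
    """Number of differing positions between two aligned, equal-length sequences."""
    if len(a) != len(b):
        raise ValueError("sequences must be aligned to the same length")
    return sum(x != y for x, y in zip(a, b))


def nearest_neighbor_origin(genomes: list[tuple[str, str, str]]) -> dict[str, str]:
    """Map each genome name to the location of its closest other genome.

    `genomes` holds (name, location, aligned_sequence) tuples. Ties in distance
    are broken in favor of a neighbor from the same location.
    """
    result = {}
    for name, loc, seq in genomes:
        best_dist, best_loc = None, None
        for other_name, other_loc, other_seq in genomes:
            if other_name == name:
                continue
            d = hamming(seq, other_seq)
            if best_dist is None or d < best_dist or (d == best_dist and other_loc == loc):
                best_dist, best_loc = d, other_loc
        result[name] = best_loc
    return result


def local_fraction(genomes: list[tuple[str, str, str]], focal_location: str) -> float:
    """Fraction of focal-location genomes whose nearest neighbor is also local."""
    origins = nearest_neighbor_origin(genomes)
    focal = [name for name, loc, _ in genomes if loc == focal_location]
    if not focal:
        return 0.0
    return sum(origins[n] == focal_location for n in focal) / len(focal)


if __name__ == "__main__":
    genomes = [
        ("wa1", "WA", "AAAAAAAA"),
        ("wa2", "WA", "AAAAAAAC"),
        ("wa3", "WA", "AAAAAACC"),
        ("or1", "OR", "TTAAAAAA"),
        ("wa4", "WA", "TTAAAAAT"),
    ]
    print(nearest_neighbor_origin(genomes))
    print(local_fraction(genomes, "WA"))
```

Here three of the four WA genomes have a WA nearest neighbor, giving 0.75, while wa4 is closest to the OR genome and looks more like an importation.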

How are the sequences getting to Nextstrain?

At this point, we don't house any of the SARS-CoV-2 data ourselves. We rely on GISAID and on GenBank to house that data.

GISAID is basically a database with some community norms surrounding it, and scientists from all over the world can download that data and do analyses and so forth.

So GISAID is the central database for these sequences, and Nextstrain provides the higher-level analysis.

What are the mechanics of how this happens? Does the physician take a sample, and then a local epidemiologist does the sequencing?

Yeah, this is kind of evolving. There is often, but not always, a connection between public health and an academic research group.

What happens is that a diagnostic lab receives specimens to run PCR on, to say whether or not they're positive for the virus. Often the positive specimens are then shared with a lab that does the sequencing.

So globally, this turns out to be pretty bottom-up.

These are individual partnerships between academic institutions and clinicians that end up generating these samples, rather than a top-down effort where a government public health organization says, we would like to have all of the samples sequenced.

So that's really interesting. Do you think that because of that, you're getting a spotty view of what strains are out there?

Like you're seeing the strains that happen to arrive at academic hospitals, that's a subset of the patients, that's not the full population?

Yeah. For the most part, it definitely is these smaller operations. There are a few larger efforts, like the COG-UK consortium in the UK, which operates through the NHS and has things quite centralized. Whereas in other places, yeah, it is much more spotty.

I think there it's less about the particular hospital and more about the general geography.

We have situations where Washington, Wisconsin, and Connecticut are well sequenced in the US, but some other states are not as well sequenced. That gap is being bridged over time, but it definitely exists.

And it makes some of the interpretations difficult, where if you're missing samples from a neighboring state, it might be difficult to understand what's actually going on with transmission.

Right, if there's like a jump through a different state, you're not going to be able to catch that.

Exactly. So what we're trying to do now is get groups in public health set up with their own local Nextstrain instances, so they can run analyses for, say, Utah or Washington State, whatever local geography is of interest.

There might also be more metadata that can be shared locally, because what can be shared to the public database is quite limited. Then you can look at what's happening with transmission in your local area.

So more distributed and more finely tuned to localities.

So it seems like in the ideal world, you start sequencing more and more of these patients. Is there a future where we're actually doing even the diagnosis step by sequencing?

Yeah. Sequencing as diagnostics has a lot of potential. Right now with diagnostics, you need a panel: when this person presents, we're going to look for flu and RSV, or we'll have some slightly broader panel.

Whereas if you move to sequencing as diagnostics, you'd just sequence whatever's in there. That would help to discover novel pathogens and so forth.

So you're not going one by one. You're doing a pan-pathogen screen. Exactly. Yeah. I think there's also such a big advantage in terms of accuracy. We've been hearing a lot about false negative and false positive rates for COVID tests.

But if you're doing sequencing, it's really hard to misinterpret a sequence. There's so much information in there that, unless you've had a sample switch or some other sample error, the test itself is very unlikely to give you an error.

I'm thinking of our inherent virome, all the viruses that we carry as part of our microbiome.

It might be hard to actually know what the disease-causing virus would be or the disease-causing agent, because we have this huge garden of different microbes that are part of our physiology.

Yeah, this is very true, where if you find a few reads from some random virus, how do you know that this is causal to the symptom presentation?

Would that be the only con? Other than cost, which is obviously prohibitive right now, are there any other drawbacks to sequencing as diagnostics?

I think right now, the big drawback would be latency. There isn't a good protocol that gets you to a positive or negative result as quickly as just doing PCR. There's some really exciting stuff happening here, though.

So there's this SwabSeq protocol that Sri Kosuri has developed.

Yes, I don't know if you've spoken to them.

Well, we're investors. They just launched.

Awesome. With that, you can use sequencing to really scale up your diagnostics, and I think that's exciting. In that case, you're perhaps not getting full genomes out, but you're at least using sequencing as the readout instead of PCR.

I've been fielding a lot of questions from friends and family about the virus mutating. What does this mean? Does this mean we're all screwed? How have you been dealing with that in your communication with the public, or with your friends and family?

Generally, natural selection acts to purge diversity: mutations generally break things, and natural selection does its best to keep those weeded out of the population.

It's much rarer for a mutation to appear that actually makes the virus more fit and takes off.

Right. The viral genome has evolved over billions of years to be this perfectly functioning, tight little package. So mutations are more likely to mess that up than to make it better, and you usually see the mutations being removed.
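The point that selection mostly weeds mutations out, and only rarely lets one take off, can be illustrated with the standard deterministic recursion for haploid selection: a mutant with relative fitness 1 + s moves from frequency p to p(1 + s) / (1 + ps) each generation. This sketch ignores genetic drift and population structure, and the starting frequency and selection coefficients in the usage are arbitrary illustrative values.

```python
def next_frequency(p: float, s: float) -> float:
    """One generation of deterministic haploid selection.

    The mutant has relative fitness 1 + s versus 1 for the wild type,
    so s < 0 is deleterious and s > 0 is beneficial.
    """
    mean_fitness = p * (1 + s) + (1 - p)
    return p * (1 + s) / mean_fitness


def trajectory(p0: float, s: float, generations: int) -> list[float]:
    """Mutant frequency in each generation, starting from p0."""
    freqs = [p0]
    for _ in range(generations):
        freqs.append(next_frequency(freqs[-1], s))
    return freqs


if __name__ == "__main__":
    # A mildly deleterious mutation is purged; a beneficial one sweeps.
    print(trajectory(0.1, -0.1, 50)[-1])  # roughly 0.0006
    print(trajectory(0.1, 0.1, 50)[-1])   # roughly 0.93
```

Under this model the odds p / (1 - p) simply multiply by 1 + s every generation, which is why even a small fitness cost drives a mutation toward loss.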

This has come up with every epidemic we've dealt with. It was very acute in the West African Ebola outbreak. There was a mutation that happened early on in the outbreak,

around the introduction from Guinea into Sierra Leone, and that lineage really took off and caused many of the infections.

You could even measure that mutation and see that it actually, in cell culture, caused better binding to human cells than bat cells. And so people thought that, yes, that's an example where that mutation was causing Ebola to be more transmissible.

But then they did experiments in animal models and found that the mutation's effect didn't hold up. So generally, my prior for almost all of these is quite low.

If we see a mutation, even a high-frequency one, it's probably doing nothing. That doesn't mean it's definitely doing nothing, but my prior is low. And in general, SARS-CoV-2 is a bad enough virus to deal with anyway,

so even if a slight increase in transmissibility were to evolve, it wouldn't be large compared to how transmissible the virus already is.

Yeah, related to viral evolution is the idea that very virulent viruses that kill their hosts tend to evolve to become less virulent, because viruses don't actually want to kill their hosts: that's the end of viral transmission.

If they can evolve to become highly transmissible but not deadly, that's kind of the ideal virus goal.

Yeah, this is a really good point. It's played out in a lot of study of what's called set-point viral load in HIV, where we've seen that strains can evolve to produce infections with more virus in them.

You'd expect strains with more virus per infection, a higher viral load, to transmit more quickly and then take over the population.

But what we've seen for HIV over the last 80 years is that the virus is held at an equilibrium, because if it has too high a set-point load, it kills the host too quickly, and that is selected against.

So for HIV, an intermediate set-point load ends up being the best phenotype for the virus to transmit. With SARS-CoV-2, it's not clear whether evolution would reduce its viral load.

It might evolve toward less severe symptoms and more presymptomatic and asymptomatic transmission, which might be better for it and increase spread.

Or it might be evolutionarily advantageous for the virus to just have a higher viral load and be able to infect more contacts in those days three to five before symptom onset.

So it's not clear from first principles even which direction evolution would push the virus in this case.
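The set-point tradeoff can be illustrated with a toy model: a higher viral load raises the transmission rate but shortens the time the host remains infectious, so total transmission (rate times duration) peaks at an intermediate load. The saturating and declining functional forms and the parameters `k`, `vd`, and `d0` are hypothetical choices, not estimates for HIV. With these particular forms, the optimum falls at a viral load of sqrt(k * vd).

```python
def transmission_rate(v: float, k: float) -> float:
    """Hypothetical transmission rate per unit time: saturates as viral load v rises."""
    return v / (v + k)


def infection_duration(v: float, vd: float, d0: float = 10.0) -> float:
    """Hypothetical infectious period: shortens as viral load v rises."""
    return d0 / (1 + v / vd)


def total_transmission(v: float, k: float, vd: float) -> float:
    """Toy proxy for transmission over the whole infection: rate x duration."""
    return transmission_rate(v, k) * infection_duration(v, vd)


def best_log10_load(k: float, vd: float, lo: float = 2.0,
                    hi: float = 8.0, step: float = 0.01) -> float:
    """Grid-search the log10 viral load that maximizes total transmission."""
    n = int(round((hi - lo) / step))
    grid = [lo + i * step for i in range(n + 1)]
    return max(grid, key=lambda x: total_transmission(10 ** x, k, vd))


if __name__ == "__main__":
    # sqrt(1e4 * 1e6) = 1e5, so the optimum should sit near log10 load 5.
    print(best_log10_load(k=1e4, vd=1e6))
```

Loads far below the optimum transmit poorly per unit time, and loads far above it end the infectious period too soon, which mirrors the intermediate set point described for HIV.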

Actually, thinking of Ebola and even MERS, these highly deadly viruses, why do you think they haven't evolved to be less deadly?

With MERS, almost all the sustained evolution is happening in camels; it's kind of the common cold of camels in the Arabian Peninsula. So the virus is really just trying to be good at replicating and transmitting within camels.

When it spills over into humans, there are short transmission chains that die out.

The reason it's so deadly in humans is that MERS is really good at binding in the lower respiratory tract, and it causes these deep respiratory infections that are quite dangerous.

But because of that, it's also not very transmissible, just biophysically. It doesn't really get out of the lower respiratory tract to infect the next person, so there's this fundamental limitation.

Why we apparently didn't see much evolution one way or the other, toward attenuation or toward virulence, in West Africa, where we had very long sustained transmission chains, is more of an open question.

The easiest resolution there is that, for Ebola, it's nontrivial for the virus to evolve to be better or worse at transmitting, so it just can't make that step easily.

When you're looking at your genomic epidemiology data, how can you distinguish between selection, a mutation actually being better, and that variant just happening to be the one that seeded the original outbreak?

It's a founder population, yeah.

Yeah, that's a huge issue, and there's not an obvious resolution to it. This is most acute right now with the D614G mutation in the spike protein, which happened to coincide with the virus that founded the European outbreak.

So we see the G variant increase in frequency across the globe, but it's unclear how much of that is due to natural selection versus just founder effects and epidemiology.
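The founder-effect confound can be shown with a deterministic toy model: two variants with identical fitness each seed a different region, and one region's epidemic simply grows faster for reasons unrelated to the virus (contact patterns, timing of interventions). The global frequency of the faster region's variant rises anyway. The region names, seed sizes, and growth rates are hypothetical, not estimates for any real outbreak.

```python
import math


def global_variant_frequency(t: float, seeds: dict[str, tuple[str, float, float]],
                             variant: str) -> float:
    """Frequency of `variant` among all infections at day t.

    `seeds` maps region -> (variant that seeded it, initial infections, daily growth rate).
    Each regional epidemic grows exponentially; the variants themselves have equal
    fitness, so any change in frequency comes only from regional differences.
    """
    total = 0.0
    with_variant = 0.0
    for seeded_variant, n0, r in seeds.values():
        n = n0 * math.exp(r * t)
        total += n
        if seeded_variant == variant:
            with_variant += n
    return with_variant / total


if __name__ == "__main__":
    seeds = {
        "region_1": ("D", 100.0, 0.05),  # hypothetical: larger seed, slower growth
        "region_2": ("G", 10.0, 0.15),   # hypothetical: smaller seed, faster growth
    }
    for day in (0, 30, 60):
        print(day, round(global_variant_frequency(day, seeds, "G"), 3))
```

Here G rises from about 9% of infections on day 0 to about 67% on day 30 and about 98% on day 60, with no fitness difference at all, which is why a rising frequency alone cannot establish selection.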

Yeah, there's another difficulty here. Because the virus is traveling geographically, you have different populations with different susceptibilities.

Perhaps the European population is just genetically predisposed to getting infected by a virus with that mutation. It's so hard to disentangle all of these different effects within the genomic data.

Yeah, the virus isn't the only factor that influences this.It's also the differences in the human genome between populations.

Mm-hmm. I think people are looking at this. There are GWAS studies going on looking for human genetic variants that might impact severity. As far as I've seen, nothing has come up yet.

That doesn't mean nothing will. For instance, for flu, they were able to find a couple of variants that did impact severity. But generally, I'd suspect that most of the differences will be much more about human behavior than about human genetics.

Yeah, we were just talking this morning about how differences in speech patterns can determine how much expectorate you have and how that can influence the spread of the virus, which I think is just wild.

I have been really fascinated with the idea of viral spillover lately. How are mutations and spillover linked?

We have these situations where generally viruses will have a reservoir of natural hosts that they're well adapted to.

And then you have this risk that when humans are encountering that animal reservoir in some fashion, that you have a spillover event, and then you have something that can then replicate and transmit within humans.

The main open question is how much of that ability to then go from human to human is, quote, pre-adaptation, already present by happenstance from adaptation in the reservoir, versus how much comes from adaptive evolution during that first human infection that then enables spread and transmission.

For a virus to become a transmissible strain, it's got to do two things. It's got to evolve the ability to infect human cells, and it also has to evolve the ability to go from human to human.

And it can develop that ability to transmit human to human actually before it adapts the ability to infect a human cell.

Yes. If we look at avian flu, say H5N1, there have been hundreds of spillover events into humans, with a high mortality rate when those occur, but we basically haven't seen the virus, once it gets into that first person, quickly evolve to be able to go from human to human.

If we look at MERS, we have occasional spillover events from camels, and then it will cause an outbreak of 50 or 100 cases or so, but it basically ends up being self-limiting.

During that time, we still don't see the virus evolve to become more transmissible. That's versus what seemed to happen with SARS-CoV-2, where whatever sparked in late November or early December just continued spreading from that point.

It's conceivable that the first person was infected with a virus that was less transmissible human to human, that with the next person you get a small ratchet, and that by the end of November you have something that's quite transmissible.

But exactly how many steps of evolution happen in those first infections to produce these very human-to-human transmissible epidemics is actually quite unknown.

So one scenario for why SARS-CoV-2 was apparently so transmissible very early on is that it had pre-adapted to be transmissible within humans before it jumped into humans. And then it was, boom, off to the races.

Exactly. That seems to be what we've seen in flu pandemics and other situations as well: a lot of the time it is this pre-adaptation, or at least some degree of pre-adaptation, that really causes things to take off.

Do you think that's something that we could elucidate, like, forensically after the fact?

Like, could we go back and look at those first couple of cases, and either through genomic analysis or through just looking at what the expected infection rate would have been?

Can we figure out whether it actually mutated for human transmissibility so quickly?

Yeah. So SARS-CoV-2 has, I think, six key mutations in the receptor-binding domain of the spike protein that shift its binding from preferring bat-like ACE2 receptors to human-like ACE2 receptors, which are also cat-like, pangolin-like, and so forth.

We know there's been a shift there and that those mutations are quite important, but they could have occurred a while ago, to bind to an intermediate host such as pangolins or cats, and then the virus was already pre-adapted when it first emerged into humans.

Or it might have been partway along, and the last couple of mutations could have happened in that early transmission cycle. I don't think we'll ever be able to know that unless we can somehow dredge up those samples.

Because generally, in any of these sorts of outbreaks, it's very rare to catch the actual patient zero.

Right. You wouldn't even know to catch them.

So let's talk about infectious disease surveillance and spillover prediction. Is there any way we can predict these events and prepare for them now?

I think having some sequencing and knowledge of what's circulating in animal reservoirs is very appropriate and should be done. But even if we were to suddenly have the sequences of every virus in every animal reservoir in the entire world, we'd still be left not knowing which ones are actually a threat to humans.

I think where public health pandemic-preparedness investment should really go is into stamping out outbreaks quickly: very soon after you have that patient zero, that index case, being able to recognize that yes, this is a novel pathogen and we need to make sure it doesn't spread. Being very reactive and able to identify those cases early on is, I think, the pragmatic way to attack this.

So as we think about how to prepare for the next outbreak, the next spillover event, how could things be improved? What kind of tools would you want to see on the public health side?

Yeah, I think this is an extremely challenging problem, just from a data-amalgamation standpoint.

The way reporting works is still quite finicky, relying in many cases on spreadsheets. Having a sample test positive for a disease, having that flow to public health in a transparent, easy fashion, attached to the appropriate metadata, and having all of that work is super challenging.

We kind of experienced this to a large degree with the Seattle flu study work that we were doing, where we were trying to collect and sequence a bunch of different respiratory infections.

So we had to stand up some of these systems ourselves just to register information about patients and so forth, and to pull electronic medical record data, which is difficult. We want systems that can amalgamate that data, and they really don't exist in a good form.

If we look at the need for something like the COVID Tracking Project just to count the tests that are occurring, we can see how challenging this is, let alone attaching all the metadata you'd want.

Yeah, I was talking to someone from the Gates Foundation about how malaria cases are counted. It's in paper ledgers, which get put into a spreadsheet every quarter.

To have actionable data, you need to know what's happening today, this week, not what was happening three months ago. The ability to make change happen is completely delayed by that.

I do think it's interesting how the genomic side has been pushing this a bit: each genome sequence is a nice atomic thing that can be shared with metadata surrounding it, in a way that is perhaps harder to achieve with many other kinds of data sources.

I don't know how to import some of that back to, say, case counts, but it has been interesting to see how far ahead the genomic side has been on this.
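The idea of a genome as an atomic, shareable unit with its metadata attached can be sketched as a small record type with basic validation and JSON serialization. The field names and checks are hypothetical, chosen for illustration; they are not GISAID's or Nextstrain's actual metadata schema.

```python
from __future__ import annotations

import json
from dataclasses import asdict, dataclass, field
from datetime import date


@dataclass(frozen=True)
class GenomeRecord:
    """Hypothetical record: one genome plus the metadata that travels with it."""
    strain: str
    collection_date: str  # ISO 8601 date string, e.g. "2020-03-01"
    location: str
    sequence: str
    extra: dict[str, str] = field(default_factory=dict)

    def validate(self) -> list[str]:
        """Return a list of problems; an empty list means the record looks usable."""
        problems = []
        if not self.strain:
            problems.append("missing strain name")
        try:
            date.fromisoformat(self.collection_date)
        except ValueError:
            problems.append("collection_date is not an ISO date")
        if set(self.sequence.upper()) - set("ACGTN-"):
            problems.append("sequence contains unexpected characters")
        return problems

    def to_json(self) -> str:
        """Serialize the whole record, metadata included, as one JSON object."""
        return json.dumps(asdict(self), sort_keys=True)


if __name__ == "__main__":
    record = GenomeRecord("sample/1", "2020-03-01", "WA", "ACGTN")
    print(record.validate())
    print(record.to_json())
```

Keeping the sequence and its metadata in one self-describing object is what lets each record be validated, shared, and aggregated independently.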

Trevor, thank you so much for joining us on the a16z Podcast. We really enjoyed our conversation with you.

Thank you, Lauren. And thank you, Judy.
